Preferred Citation: Foote, Susan Bartlett. Managing the Medical Arms Race: Innovation and Public Policy in the Medical Device Industry. Berkeley: University of California Press, c1992 1992. http://ark.cdlib.org/ark:/13030/ft5489n9wd/

Managing the Medical Arms Race

Public Policy and Medical Device Innovation

Susan Bartlett Foote

UNIVERSITY OF CALIFORNIA PRESS
Berkeley · Los Angeles · Oxford

© 1992 The Regents of the University of California

To my children,

Rebecca and Benjamin

PREFACE

This book provides a comprehensive look at the wide range of public policies that affect innovation in the medical device industry. It is a case study of the interaction between technology and policy—the relationship between private industry and government institutions. It is a tale that appeals to our most optimistic views about the ability of American know-how to diminish pain and suffering. It is also the story of technological failures and disappointments, a story that reveals the strengths and weaknesses of America's public and private institutions. Because we all live and will probably die surrounded by medical devices, the consequences of this interaction between government and business affect the health care choices that we as patients will have in the future.

The products of the medical device industry are probably more familiar to readers than the term medical device might convey. There is considerable popular interest in such innovations as the controversial intrauterine device (IUD), lithotripsy machines that crush kidney stones without surgery, angioplasty performed with lasers that remove plaque deposits from coronary arteries, and life-support systems for premature infants, to name only a few of the over 3,500 different products on the market.

Despite the importance of medical device innovations, the interrelationship of the industry to government policy is neither well studied nor well understood. Indeed, knowledge of the device industry has not expanded as rapidly as its relative importance to health care. There are good and useful data and analyses, but they are scattered across many fields or cover only a limited amount of the story told here. There is extensive literature on innovation, but it often diminishes or ignores the impact of public policy.[1]

An excellent example is the recent work by Manuel Trajtenberg, Economic Analysis of Product Innovation: The Case of CT Scanners (Cambridge: Harvard University Press, 1990). In this work, the author uses the CT scan to quantify and analyze the notion of product innovation. However, he devotes only a scant four pages to the regulatory environment.

There are excellent studies of particular public institutions, upon which I have relied, but they do not focus exclusively on medical devices.[2] For work on the FDA, see Peter Temin, Taking Your Medicine: Drug Regulation in the United States (Cambridge: Harvard University Press, 1980); and Richard A. Merrill and Peter Barton Hutt, Food and Drug Law (Mineola, N.Y.: Foundation Press, 1980). For the National Institutes of Health, see Victoria A. Harden, Inventing the NIH: Federal Biomedical Research Policy 1887-1937 (Baltimore: Johns Hopkins University Press, 1976); and Steven P. Strickland, Politics, Science, and Dread Disease (Cambridge: Harvard University Press, 1972). For Medicare, see Louise B. Russell, Medicare's New Hospital Payment System: Is It Working? (Washington, D.C.: The Brookings Institution, 1989). These references are illustrative of the work in the field. More complete citations accompany the subsequent chapters.

There are also excellent case studies of individual medical device technologies, including kidney dialysis, artificial organs, and the artificial heart.[3]

Richard A. Rettig, "Lessons Learned from the End-Stage Renal Disease Experience," in R. H. Egdahl and Paul M. Gertman, eds., Technology and the Quality of Health Care (Germantown, Md.: Aspen Systems, 1978). Alonzo Plough, Borrowed Time: Artificial Organs and the Politics of Extending Lives (Philadelphia: Temple University Press, 1986). Natalie Davis Spingarn, Heartbeat: The Politics of Health Research (Washington, D.C.: Robert B. Luce, 1976).

Much of the groundwork for this book has been laid by the technical studies of the Office of Technology Assessment, a research division of the U.S. Congress.[4]

U.S. Congress, Office of Technology Assessment, Federal Policies and the Medical Devices Industry (Washington, D.C.: GPO, October 1984) and Medical Technology and the Costs of the Medicare Program (Washington, D.C.: GPO, July 1984). Other government studies of importance, including reports and investigations by the General Accounting Office, are cited in subsequent chapters.

Health policy analysts have examined important aspects of the medical device industry, and my debt to them is gratefully acknowledged.[5] See, for example, Karen E. Ekelman, ed., New Medical Devices: Factors Influencing Invention, Development, and Use (Washington, D.C.: National Academy Press, 1988); and H. David Banta, "Major Issues Facing Biomedical Innovation," in Edward B. Roberts, ed., Biomedical Innovation (Cambridge: MIT Press, 1981).

The extensive citations throughout this book testify to the serious scholarship that is relevant to an understanding of the issues addressed. My aim is to provide a more comprehensive overview of the interaction of public policy and innovation than is currently available. I have been interested in medical device technology and policy for well over a decade, and sections of this book draw on my previous work.[6]

See, for example, Susan Bartlett Foote, "Loops and Loopholes: Hazardous Device Regulation under the 1976 Medical Device Amendments to the Food, Drug, and Cosmetics Act," Ecology Law Quarterly 7 (1978): 101-135; "Administrative Preemption: An Experiment in Regulatory Federalism," Virginia Law Review 70 (1984): 1429-1466; "From Crutches to CT Scans: Business-Government Relations and Medical Product Innovation," in James E. Post, ed., Research in Corporate Social Performance and Policy 8 (Greenwich, Conn.: JAI Press, 1986), 3-28; "Coexistence, Conflict, Cooperation: Public Policies toward Medical Devices," Journal of Health Politics, Policy and Law 11 (1986): 501-523; "Assessing Medical Technology Assessment: Past, Present, and Future," Milbank Quarterly 65 (1987): 59-80; "Product Liability and Medical Device Regulation: Proposal for Reform," in Karen E. Ekelman, ed., New Medical Devices: Factors Influencing Invention, Development, and Use (Washington, D.C.: National Academy Press, 1988), 73-92; and "Selling American Medical Equipment in Japan," California Management Review 31 (1989): 146-161.

I hope to do for medical devices what Paul Starr accomplished for the medical profession, Charles Rosenberg and Rosemary Stevens have done for hospitals, and Henry Grabowski has contributed to our understanding of the pharmaceutical industry.[7] Paul Starr, The Social Transformation of American Medicine (New York: Basic Books, 1982); Charles E. Rosenberg, The Care of Strangers: The Rise of America's Hospital System (New York: Basic Books, 1987); Rosemary Stevens, In Sickness and in Wealth: American Hospitals in the Twentieth Century (New York: Basic Books, 1989); and Henry Grabowski and J. M. Vernon, The Regulation of Pharmaceuticals: Balancing the Benefits and Risks (Washington, D.C.: American Enterprise Institute, 1983). See also Grabowski, Drug Regulation and Innovation: Empirical Evidence and Policy Options (Washington, D.C.: American Enterprise Institute, 1976). The pharmaceutical industry has been well studied. See also Jonathan Liebenau, Medical Science and Medical Industry: The Formation of the American Pharmaceutical Industry (Baltimore: Johns Hopkins University Press, 1987).

A formidable task indeed! The discussion is accessible to all readers interested in medical technology and health policy regardless of training or expertise. Too often, the temptation among academics is to speak only to their own kind, communicating in the shorthand of their scholarly disciplines. Such a self-protective ploy is not possible here because of the wide range of issues and the breadth of the potential audience. The challenge, which I hope has been met, is to tell the story without cloaking the message in unnecessary technical jargon.

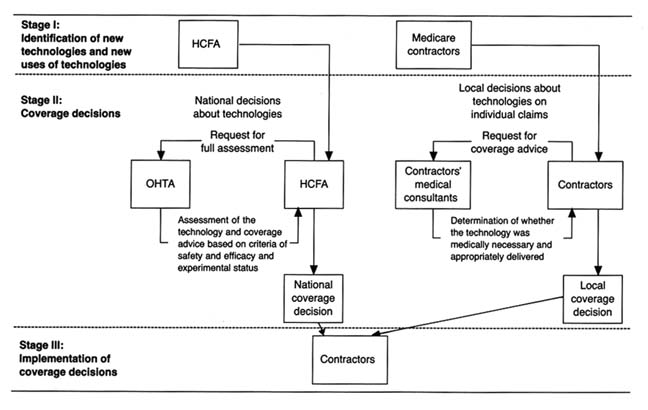

This book is also designed to add to the more specialized knowledge of experts. These readers may benefit from exposure to areas of policy that their own work does not address. For example, the analyst who spends his days implementing Medicare policy at the Health Care Financing Administration in Washington (HCFA) may not realize the relevance of product liability or federal regulation to the very innovations he assesses for payment or coverage purposes. The same is true for the physician who may know much about certain medical devices but little about the role of government in determining their cost and availability and for the product manager who may see the government only as a barrier to her marketing plan.

Students of business-government relations will find that the medical device industry provides an interesting case study of policy proliferation problems that affect other sectors of the economy as well. Extensive chapter references should help all readers pursue particular issues in greater depth.

In writing this book, I personally encountered the negative and the positive aspects of medical devices. I brought the manuscript along when I took my mother for radiation treatments during her valiant, but losing, battle with lung cancer. There seemed no end to the technological fixes that offered false hopes and that only prolonged pain and suffering. I saw firsthand the role of technology as a substitute for the caring side of medicine. On a more optimistic note, I brought this manuscript to the hospital when I underwent two operations on my own back. These procedures were successful in large part because of the advanced state of diagnostic imaging and the whole range of technological innovations in surgical technique. While this form of field research is one I do not care to repeat, I acquired new respect for these costly, yet effective and beneficial, technological advances.

Of course, my exposure to medical technology is not unique. All of us have felt the impact of the health care system and its technology on ourselves and our families. The innovations and the institutions discussed in this book inevitably touch us all.

No book is complete without acknowledgments. I am indebted to colleagues in the Business and Public Policy Group at the Walter A. Haas School of Business at the University of California, Berkeley, including Budd Cheit, Edwin Epstein, Robert Harris, David Irons, David Mowery, Christine Rosen, and David Vogel, who commented on various drafts. Others at Berkeley provided useful critique and support, including Sally Fairfax, Judy Gruber, Charles O'Reilly, and Franklin Zimring. Dr. Stephen Bongard recently of the Institute of Medicine and Dr. Peter Budetti of the George Washington University School of Medicine shared their expertise and experiences at various stages of this project.

Students of law and business at Berkeley, including Richard S. Taylor, Ann R. Shulman, Kenneth Koput, Dr. David Okuji, Dr. Michael J. Sterns, Brian Shaffer, and Emerson Tiller, provided able research assistance, and the Harris Trust at the Institute for Governmental Studies at Berkeley and the Program in Business and Social Policy supplied funds for their support. I am also grateful to the Institute of Medicine at the National Academy of Sciences, which provided forums for thoughtful analysis and debate of important health policy issues in which I was invited to participate. Serena Joe helped with typing the manuscript and Patricia Murphy provided meticulous word processing and editorial advice.

When the urge to abandon was strongest, I was encouraged by William Lowrance of The Rockefeller University, who believed in the idea and sustained me in the early stages. I am grateful to Robert Coleman, who could always make me laugh, and to Ed Freeman of the University of Virginia, who knew there was a story to be told.

During the 1990–1991 academic year, I was fortunate to have been selected as a Robert Wood Johnson Congressional Health Policy Fellow. I extend deep appreciation to Marion Ein Lewin, the director of the fellowship, and to the five other RWJ fellows who served as colleagues, teachers, and most of all as friends. I am especially indebted to Senator Dave Durenberger and his exceptional staff, who allowed me to participate in the Senate health policy process in the first session of the 102d Congress.

PART ONE

THE DIAGNOSIS

The machine itself makes no demands and holds out no promises: it is the human spirit that makes demands and keeps promises.

Lewis Mumford, Technics and Civilization

1

The Diagnostic Framework

The gains in technics are never registered automatically in society; they require equally adroit inventions and adaptations in politics; and the careless habit of attributing to mechanical improvements a direct role as instruments of culture and civilization puts a demand upon the machine to which it cannot respond….

No matter how completely technics relies upon the objective procedures of the sciences, it does not form an independent system, like the universe; it exists as an element in human culture and it promises well or ill as the social groups that exploit it promise well or ill. The machine itself makes no demands and holds out no promises: it is the human spirit that makes demands and keeps promises.

Lewis Mumford, Technics and Civilization

The Problem

Medical devices, the "technics" of this book, pervade our experiences with health care throughout our lives—from fetal monitoring equipment and ultrasound imaging before birth to life-support systems and even suicide machines when death is near.[1]

Lewis Mumford, Technics and Civilization (New York: Harcourt Brace Jovanovich, 1934).

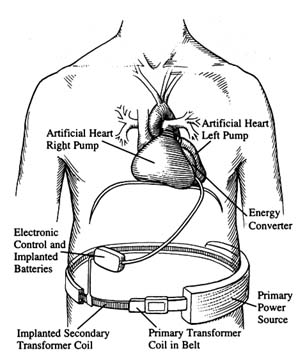

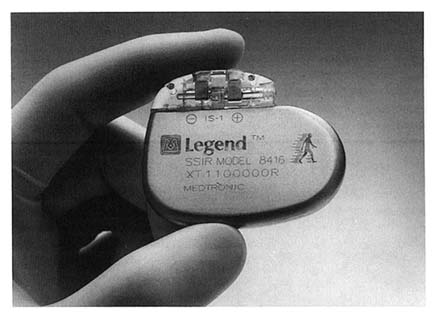

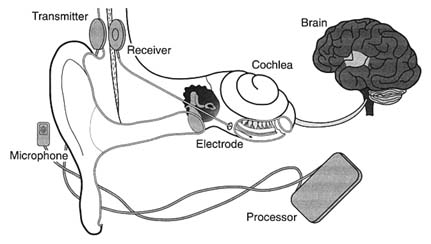

Medical care has become increasingly dependent on technology, and medical devices are the epitome of the trend. The modern hospital is a wonderland of complicated machinery, and the rhythmic beeping of heart monitors evokes a life-and-death drama. Tens of thousands of Americans depend upon artificial body parts for survival and to improve the quality of their lives—from hips to intraocular lenses to heart pacemakers. Even doctors' offices are full of new devices. Physicians use lasers to remove cataracts without the need for hospitalization; ultrasound monitors take pictures of unborn babies. The allure of medical innovation is powerful, holding out the possibility of a perfect outcome, an amelioration of pain, a delay in our inexorable decline toward death.

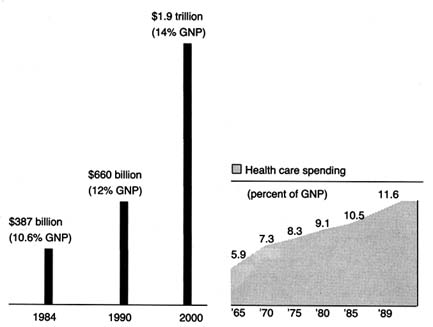

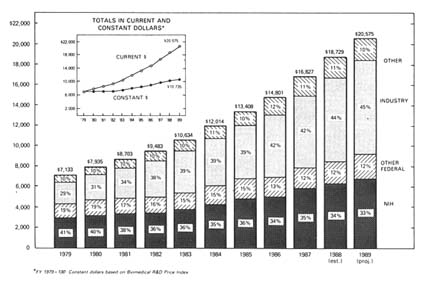

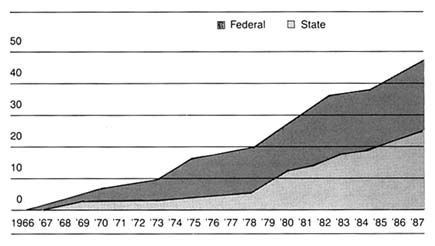

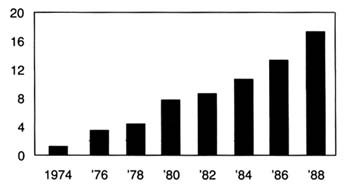

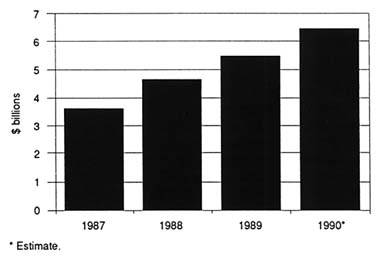

Spending on medical devices reflects this trend. This multi-billion dollar industry producing thousands of products may account for as much as 40 percent of the health care bill, which has grown to close to 12 percent of the gross national product.[2]

Forecasts placed health care as rising from 11.9 percent of the GNP in 1990 to 13.1 percent in 1995. Alden Solovg, "Recession Prospects Mixed Bag for Health Care," cited in Medical Benefits 6 (30 August 1989).

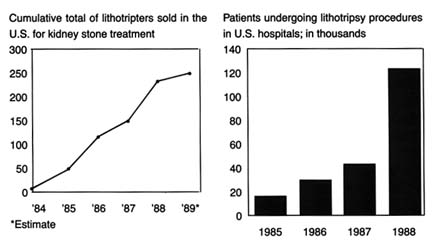

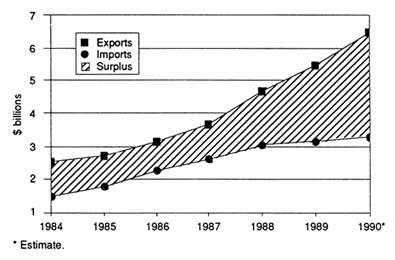

(See figure 1.) The demand seems insatiable. We have come to expect a steady stream of new "miracles." Experts predict bloodless laser surgery in the near future and a genetic engineering revolution at the turn of the century. "At the current rate of innovation," an analyst recently projected, "by the year 2000 close to 100,000 new or enhanced medical devices will be introduced into the marketplace. Health care will be a $1.5 trillion industry."[3] Russell C. Coile, "Advances in the Next Decade Will Make Today's Technology Seem Primitive," cited in Medical Benefits 6 (30 August 1989).

Demand is fueled by our belief in equitable access to medical care. When a medical innovation is considered beneficial, there is pressure to distribute its benefits to all who need it. News stories of people denied access to high-cost treatments because of their inability to pay generate public sympathy and, often, outrage. Until very recently, the ideal of the highest quality care for everyone has been rarely questioned, albeit unattained.

Medical technology is not without critics who challenge any unquestioning belief in its value. Some argue that in our love affair with technology we have sacrificed the caring, or service, side of medicine.[4]

Stanley Joel Reiser, Medicine and the Reign of Technology (Cambridge: Cambridge University Press, 1978).

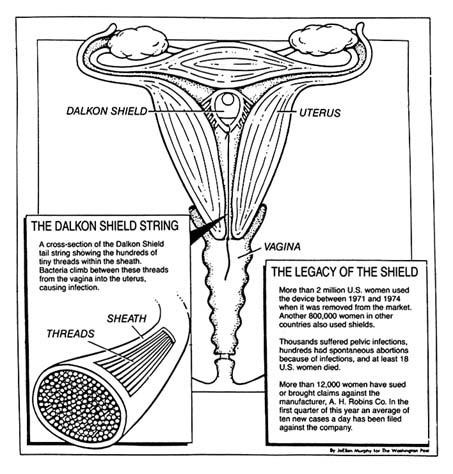

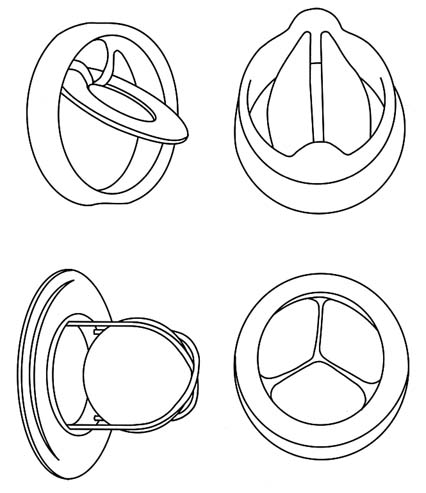

The public is also intolerant of technologies that cause harm. The dangers of the Dalkon Shield, an intrauterine device (IUD) that injured thousands of women, received widespread publicity. In a recent controversy, the government has charged that a medical device producer marketed heart valves it knew to be defectively designed, leaving thousands in daily fear that their implanted devices will fail and kill them.[5] The risks of the Bjork-Shiley heart valve, an implanted disk that controls the flow of blood through the heart, received publicity in 1990. The valve has a tendency to fail in some cases, leading to the deaths of recipients. The legal and regulatory issues raised by this medical device are discussed in chapter 6.

Figure 1. Forecasted health spending.

Sources: (a) M. S. Freeland et al., Health Care Financing Review 6 (Spring 1985): 1–20; and K. W. Tyson and J. C. Merrill, Journal of Medical Education 59 (1984): 773–81; (b) Health Care Financing Administration.

Some blame medical technology for the escalating costs of care as well. There is no question that some technology is expensive, but other products reduce costs through early diagnosis or shorter hospital stays. Pressures to control costs have led to calls for elimination of wasteful or unnecessarily expensive equipment. Exposure of fraud in the cardiac pacemaker industry and of unnecessary implants of intraocular lenses lends credence to this charge.[6]

Cardiac pacemakers are discussed in chapters 4 and 5; intraocular lenses are studied in chapter 7.

Even highly desirable technologies are controversial. There can be tension between providing access to expensive lifesaving devices to a few and more widespread access to lower-cost benefits to many. In a recent policy decision, Oregon medical officials declared that the Medicaid program, which serves the poor in that state, would no longer pay for liver transplants, a desirable high-cost, lifesaving technology, in order to use the funds to cover prenatal care for a larger number of low-income pregnant women. The state also recommended a priority list to determine which treatments would be covered under the program.[7]

See Karen Southwick, "Oregon Blazing a Trail with Plan to Ration Health Care," Healthweek, 12 March 1990, 30, 33. To implement its plan, Oregon needs waivers from some of the federal Medicaid requirements. In expanding coverage for poor families by restricting benefits, Oregon would violate a requirement that families receiving federal aid automatically receive full Medicaid coverage as well. Waivers can be granted administratively or through Congress. There is much political controversy about the rationing scheme. By the end of 1990, Oregon was revising its final priority list, and Congress was in a "wait-and-see" mode. Virginia Morell, "Oregon Puts Bold Health Plan on Ice," Science 249 (3 August 1990): 468-471.

This is one example of the many explicit and implicit tradeoffs that are made as public and private budgets are stretched to the limit.

Medical device innovations—with lifesaving promises, potential risks, and often high price tags—are deeply embedded in the broad debates about health policy. The insatiable demand, fed by a profusion of new technologies and the profits they represent, has been called the medical arms race. Increasingly, government has been called upon to manage it.

This book explores the "adroit inventions and adaptations in politics" that have accompanied the profusion of medical devices in our society. As government has become inextricably linked to health services, public policies affecting medical devices have proliferated. These policies reflect diverse values and arise from many different institutions. Because of the pervasiveness of public policy, the medical device industry can only be understood in relation to the policy environment. Indeed, the industry grew and matured in response to public policy incentives. Those responses, in turn, brought new layers of public policy.

A medically related analogy will help to structure the discussion. Policy proliferation is analogous to polypharmacy, an increasingly familiar condition to the health care profession. Polypharmacy occurs when a patient takes a number of prescription drugs. Each prescription may have been given for a legitimate ailment, but the interactions between the drugs can harm the patient. Attentive doctors routinely request that patients put all their drugs in a brown paper bag and bring them in for review. The review evaluates what is known about the drugs and what is known about the particular patient. There may be new information about a drug product, and there may also be changes in the patient's underlying condition. A physician must look for possible interactions between the drugs. Some reactions may be previously unknown or unexpected; some predictable and even tolerable. Some may dissipate the efficacy of other prescriptions and require modifications of dosage, and some may be fatal.

This book provides a "brown-bag" review of all the policies that directly and indirectly affect the medical device industry. The industry is the patient; the present policies are the prescriptions to which the patient reacts and responds. The book begins the diagnosis by asking key questions: What is the impact of these multiple prescriptions on the patient? On the medical device industry? Are our prescriptions producing desirable outcomes without adverse and unexpected side effects? Is the result good health—in this case, meaningful innovation that is safe, efficacious, efficient, and cost effective? If not, what revisions in the treatment are appropriate? What is the patient's prognosis?

To answer these questions, we must take the patient's history, review each prescription to determine when and why it was offered, how it has changed over time, and how it relates to other prescriptions. After extensive review of these factors, the conclusion is mixed. It would, of course, be dramatic to report that government policy has destroyed device innovation; that is, the side effects are worse than the treatment. The reality is less definitive and more elusive. Until the 1990s, we have managed to muddle along, balancing myriad policy goals. Innovation flourished in the 1950s and 1960s because the dominant policies promoted both discovery of new products and their widespread distribution. Innovation has survived in the 1970s and 1980s despite safety and cost-control policies that inhibit innovation. The momentum of innovation was sustained in part because these policies were relatively unsuccessful in accomplishing their goals. Regulation was not fully implemented as intended, product liability had only sporadic effects on certain devices, and cost-containment strategies could not combat the pressure for distribution of benefits. If these policies had been successful, the industry would have been more adversely affected.

What does the future hold? We can expect renewed efforts to impose greater regulation and more effective cost controls in the 1990s. There is clear evidence of overdiffusion that government may try to control. As the marketplace attracts more equipment and as technicians and specialists arise to operate it, there can be supply-induced demand. In other words, if a facility has an MRI machine, it will find the patients necessary to operate the machine at a profit.

Policies to control supply costs can be on a collision course with the competing desire for more innovative devices. When public policies clash, there can be serious adverse reactions for innovation. The departure of most childhood vaccine producers in the wake of product liability suits and the impact of regulation and liability on innovation in contraceptive research and development have been well documented.[8]

An excellent study on the impact of policies on contraceptive research is Luigi Mastroianni, Jr., Peter J. Donaldson, and Thomas T. Kane, eds., Developing New Contraceptives: Obstacles and Opportunities (Washington, D.C.: National Academy Press, 1990).

These cases represent potential adverse effects of public policy that we must seek to avoid with other devices. Muddling through the 1990s will not be acceptable. If we allow the situation to drift, we could become passive observers of unwanted outcomes. However, prevention of harmful effects is possible. Reforms that affirmatively balance competing values while eliminating conflicts and redundancies are presented here. Incremental accommodations can relieve some of the stresses on the system. But we must recognize the limits of medical device technology to cure all ills and the limits of public policy to solve social problems. Indeed, wise policy must address the larger moral questions of how we want to live and how we want to die: difficult questions we tend to avoid.

Medical devices are sufficiently important to warrant this case study on its own merits. However, this book also presents some of the broader concerns of public policy. It contributes to our understanding of policy proliferation. In recent years, the government has developed a propensity to intervene in the economy. For example, federal agricultural policy has been described as "a complex web of interventions covering output markets, input markets, trade, public good investments, renewable and exhaustible natural resources, regulation of externalities, education, and marketing and distribution of food products."[9]

Gordon C. Rausser, "Predatory Versus Productive Government: The Case of U.S. Agricultural Policies," Journal of Economic Perspectives 2 (Winter 1992).

A historical review of these public policies reveals the tension in the government's arguably contradictory actions.[10] Rausser defines some government policy as productive (reducing transaction costs and correcting market failures) and other policy as predatory (redistributing wealth without concern for growth or efficiency). His work seeks to explain and reconcile the perceived conflicts between the two approaches.

Public policy toward tobacco also illustrates this conflicting approach.[11] Congress protects the tobacco industry with a variety of favorable economic policies while simultaneously inhibiting the sale of tobacco products through television advertising limits and warning label requirements. Officials in several administrations have used their positions to condemn the marketing and the use of tobacco, and states and localities have severely restricted or banned smoking in public places.

Additional examples include nuclear power, the oil industry, and automobiles.[12] The government protects the automobile industry with negotiated trade restrictions while also regulating automobile design to promote safety and environmental goals. Other federal policies regarding gasoline pricing and supply, highway construction, and alternative forms of transportation all affect the infrastructure upon which the automobile depends. The 1989 Alaskan oil spill revealed both redundancies and regulatory gaps between federal and state authorities and illustrated the problems that can arise when various government institutions impose overlapping or conflicting demands.

Some degree of policy proliferation is inevitable in the American system. A pluralistic and democratic society tends toward incrementalism and compromise—features that encourage the proliferation of smaller interventions rather than comprehensive unilateral policies. Separation of powers at the federal level increases the likelihood of multiple sources of intervention, with each branch employing different tools and involving different constituencies. Our national government also shares power with the fifty states.[13]

There is a vast literature on federalism. For an overview, see David B. Walker, Toward a Functioning Federalism (Cambridge, Mass.: Winthrop Publishers, 1981). For a discussion of federalism and health, see Frank J. Thompson, "New Federalism and Health Care Policy: States and the Old Questions," Journal of Health Politics, Policy and Law 11 (1986): 647-669.

Thus, multiple layers of regulation have become the norm, not the exception, particularly in the health care delivery and regulation system because of its complexity.

This book on medical device technology may have more general relevance to other areas of the economy. Of course, perfect generalizations cannot always be drawn from a specific case; each area has individual characteristics, and the variety of prescriptions will inevitably differ. However, the search for patterns and relationships in the evolution of various policies, and the study of private-sector responses to them, may be instructive for other areas of business-government relations. This chapter will first familiarize the reader with the patient and then establish the framework for the diagnostic process.

The Patient: The Medical Device Industry

Defining a Medical Device

The term medical device is often used synonymously with medical products, medical equipment and supplies, or medical technology. As a working definition, that of the Federal Food, Drug, and Cosmetic Act seems appropriate: a medical device is "an instrument, apparatus, implement, machine, contrivance, implant, in vitro reagent, or other similar or related article" that is intended for use in "the diagnosis of disease or other conditions [or the] cure, mitigation, treatment, or prevention of disease [or] intended to affect the structure or any function of the body of man, which does not achieve any of its principal intended purposes through chemical action within or on the body of man or other animals and which is not dependent upon being metabolized for the achievement of any of its principal purposes."[14]

21 U.S.C. sec. 321(h). This definition appears in the 1976 Medical Device Amendments to the Food, Drug, and Cosmetic Act, discussed at great length in chapter 5.

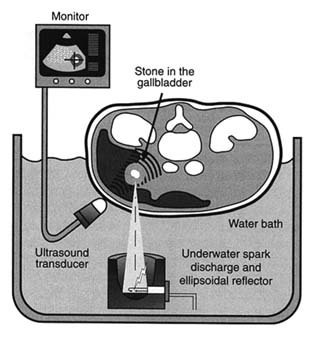

The definition is less complicated than it may first appear. Basically, devices include health care items other than drugs. Devices used for diagnosis of disease include X-ray machines, scopes for viewing parts of the body, including stethoscopes and bronchoscopes, and heart monitoring equipment, to name a few. Devices used for cure or treatment include scalpels and more complicated surgical tools, lithotripsy devices that crush kidney stones with ultrasonic waves, and balloon catheters that clean out plaque formations on arteries. Examples of products that affect the structure or function of the body include artificial lenses to correct impaired vision, diaphragms or intrauterine devices used for birth control, and cardiac pacemakers to regulate heart rhythms.

The term medical devices can be distinguished from medical products, which includes both drugs and medical devices despite the distinct biomedical properties of each. Medical devices do include, but are not limited to, all nonpharmaceutical medical equipment and supplies. The term medical technology is even broader; the Office of Technology Assessment (OTA) has defined medical technology as "drugs, devices, and medical and surgical procedures used in medical care, and the organizational and supportive systems within which such care is provided."[15]

The Office of Technology Assessment is a research arm of Congress and produces technical reports and evaluations at its request.

Thus, devices are only one part of medical technology.

Medical devices include thousands of products currently produced by over 3,500 U.S. firms. The term encompasses all supplies and equipment used in hospitals from bedpans to sophisticated monitoring devices, diagnostic products from X-rays and lab kits to complex innovations such as magnetic resonance imaging and ultrasound, and nonpharmaceutical treatment products from bandages to laser surgery equipment. Outside the hospital, medical devices are found in physicians' offices, from stethoscopes and blood pressure cuffs to automated desk-top blood analyzers and portable electrocardiograph (EKG) machines. Medical devices are also used in the home, including over-the-counter articles such as pregnancy test kits and heating pads.

Use of the definition crafted by the Food and Drug Administration (FDA) is noteworthy. First, in that this book explores the complex interrelationship between the government and the producers of devices, it is interesting that it was the FDA, a government agency, that first struggled with a precise definition of medical devices in order to formulate regulatory policy. Indeed, government helped to shape the industry by defining who was in or out for regulatory purposes. It is also interesting to note that the definition is residual, defining medical devices as products that are essentially nondrugs—reflecting the fact that the FDA regulated drugs long before medical devices were considered an important part of health care.[16]

For a discussion of the history of the FDA, see chapter 2.

As the number and complexity of these nondrugs grew, a residual, catch-all category had to be carved out. This history explains why federal regulation treats the device category as a stepchild to the more clearly identifiable drug category.

Inevitably, as in most definitions, there remain some gray areas. For example, the swimming pool that one may use to alleviate back pain generally would not be considered a device, at least for regulatory purposes. Sometimes confusion arises between the treatment process and the product because in many cases the treatment cannot be given without a particular medical device. For example, a patient cannot receive electroshock treatment without an electroshock device. Thus, some efforts to regulate the device were thinly disguised attempts to ban the treatment.

Unfortunately, the FDA definition, which works well enough for regulatory purposes, is different from the categories traditionally used by government to collect data on the industry that produces medical devices. The Census of Manufactures contains comprehensive industry statistics compiled by the U.S. Department of Commerce, Bureau of the Census. These data group medical products into five Standard Industrial Classifications (SIC codes), including designations for surgical and medical instruments, surgical appliances and supplies, X-ray, electromedical, and electrotherapeutic apparatus, dental equipment, and ophthalmic goods (see table 1). The data in these classifications capture an estimated 50 to 75 percent of products defined as medical devices by the FDA.[17]

Foote, "From Crutches to CT Scans," 4.

In the aggregate, these data, while imperfect, are sufficient to evaluate trends in the industry.

Distinguishing Medical Products from Other Consumer Goods

Health care, like education, has never been officially considered a fundamental right under the U.S. Constitution. However, many of our health policies reflect the view that health care, like education, is a form of entitlement, a human right, rather than a privilege for the few. Social welfare programs have been designed to ensure that the elderly, the disabled, and the poor receive adequate, or at least minimal, health care services.


Clearly, medical products, including both drugs and devices, are deeply embedded in and inextricably linked to health care.

The social benefits associated with medical products distinguish them from other consumer goods. Economists use the term merit goods to describe products that have greater significance to society than other consumer goods.[18]

Karl A. Fox, Social Indicators (New York: John Wiley, 1974).

Unlike toasters, lawnmowers, or home computers, medical products, and their availability and affordability, raise humanitarian as well as economic issues. As a result, tension has existed between the economics of medical care and the social side of health. For example, at the turn of the century, entrepreneurial scientists took out patents and channeled the profits into further research, their supporting institutions, or their own pockets. However, traditional medical ethics made this practice questionable for health innovations. "Medicine was ensnared, as usual, in complications resulting from its peculiar combination of business and social service."[19]

Richard H. Shryock, American Medical Research, Past and Present (New York: The Commonwealth Fund, 1947), 140.

We have not resolved this "peculiar" combination today. A recent article on financial issues for end-stage renal disease (ESRD) patients asks: "[I]s it a good idea to motivate patients … to choose their kidney dialysis units on the basis of price as well as medical quality, personal convenience, and other important factors?"[20]

Randall R. Bovbjerg, Philip J. Held, and Louis H. Diamond, "Provider-Patient Relations and Treatment Choice in an Era of Fiscal Incentives: The Case of the End-Stage Renal Disease Program," Milbank Quarterly 65 (1987): 177-202, 177.

It is difficult to imagine advising consumers of automobiles or financial services to ignore price solely in the interests of quality or convenience. In essence, important and long-standing social values undergird our views of health care and are reflected in the public policies that have emerged.

Distinguishing Medical Devices from Drugs

Although pharmaceuticals provide the closest analogy to medical devices, we should not assume that drug and device issues are identical. Both drugs and devices are essential for the treatment and diagnosis of disease, but there are very important differences as well. Recalling the FDA definition, we know that drugs and devices operate through different biomedical mechanisms—most drugs are metabolized, and devices are not. The size and the composition of the marketplace and the range of producers differ in the two industries.

Of course, drugs and medical devices are often used in concert; for example, syringes and intravenous equipment deliver drugs directly into the body. Innovative skin patches allow gradual absorption of chemicals through the skin for various medical treatments, such as for motion sickness. Drugs and devices may offer alternative treatments. Patients may choose chemotherapy, a drug treatment, over surgery, a procedure, to eradicate cancer; women can choose among birth control pills (drugs), diaphragms, and IUDs (devices) to prevent pregnancy.

The governmental distinction between drugs and medical devices is more than a biomedical nicety. Device technologies range from lasers to computer systems to implanted materials. Because of that diversity, the nature of the medical equipment and the costs associated with its purchase and use vary much more widely than for drugs. That is not to say that all pharmaceuticals are cheap. Indeed, recent introductions, particularly products based on biotechnology, carry high price tags. Tissue plasminogen activator (TPA), a drug for treatment of stroke victims, can cost as much as $5,000 a dose.[21]

In 1990, one dose of TPA cost $2,200, in contrast to the drug it claims to replace, streptokinase, which cost $200 a dose. U.S. sales of TPA in 1989 were nearly $200 million. Karen Southwick, "Analysts Say TPA Use May Drop in Wake of Study," Healthweek, 26 March 1990, 45.

Recent controversies over the high price of zidovudine (AZT), one of the few effective drugs for AIDS patients, raise the same issue. However, price variability in devices is still much broader. Devices range from simple products, such as crutches and bandages, to high-cost capital equipment, such as laboratory blood analyzers, lithotripsy equipment, and magnetic resonance imaging machines that cost several million dollars. Unlike drugs, such equipment may require maintenance, specially designed facilities, and specially trained operators and is subject to depreciation and deterioration. Other devices, such as components for kidney dialysis, raise important questions of reuse not relevant for drugs.

In addition, the medical devices market may differ from the drug market. While hospital pharmacies generate sales, physicians generally order prescription drugs for individual patients, and consumers can purchase over-the-counter (OTC) drugs directly. The primary purchasers of medical equipment are hospitals, which rank ahead of physicians, ambulatory care centers, and individuals. Hospital purchasing patterns are extremely sensitive to changing reimbursement policies by third-party payers.

There are significant distinctions between drugs and devices on the supply side as well. Because of the range of technologies embedded in medical device production, a widely diverse group of firms consider themselves part of the medical device industry. In pharmaceuticals, the top one hundred companies market 90 percent of the drugs.[22]

Grabowski and Vernon, Regulation of Pharmaceuticals, 18.

The device industry is not nearly as concentrated: currently, over 3,500 device companies produce several thousand products. These companies range from those also known for drugs, such as Johnson & Johnson and Pfizer, to electronic giants, such as General Electric and Hewlett-Packard. In addition, there are many smaller companies concentrating exclusively on medical devices, such as Alza Corporation, which specializes in innovative drug delivery systems, and Medtronic, the leader in heart pacemakers and heart valves. Others have diversified. For example, SpaceLabs, now owned by Bristol-Myers/Squibb, applied technologies that were developed for the space program to produce a variety of monitoring and data-recording devices.

Despite all this diversity, it helps to consider medical device producers as a single industry. In one sense this industry is many industries, because no substitutability exists among all products. Yet there is a commonality of buyers—hospitals, clinics, laboratories, physicians, and patients. There is also a commonality in use in a broad sense—the products affect the function of the body and/or treat disease. Finally, it is the peculiar set of government health policies that have shaped the performance of this industry. All companies are, to some extent, offspring of the same set of policies, and this brings us full circle to the FDA definition of medical devices. The industry is unified by its relationship to government, both because it is defined by government and because of its symbiotic relationship to it.

The Process: A Diagnostic Framework

As this study explores the interaction between innovative medical devices and government, an understanding of both innovation and public policy is required.

Innovation Defined

Put most simply, innovation has been defined as certain technical knowledge about how to do things better than the existing state of the art.[23]

David J. Teece, "Profiting from Technological Innovation: Implications for Integration, Collaboration, Licensing, and Public Policy," Research Policy 15 (December 1986): 285-305.

Innovation can be seen broadly as a process leading to technical change, or the concept can be more narrowly applied to a specific new product or technique.

The value of innovation to sustain economic growth and competitiveness and to improve the quality of life is not in question. Yet a clear understanding of how and why innovation occurs remains elusive. There is extensive literature, primarily in economics, on ways to model innovation.[24]

For example, in The Sources of Innovation (New York: Oxford University Press, 1988), Eric von Hippel questions the assumption that manufacturers are the primary source of innovation. He presents studies to show that the sources of innovation vary greatly, often coming from the suppliers of component parts or the product users. He then develops a theory of the functional sources of innovation. In another recent work, Economic Analysis of Product Innovation: The Case of CT Scanners (Cambridge: Harvard University Press, 1990), Manuel Trajtenberg presents a method to estimate the benefits from product innovations that accrue to the consumer over time, focusing particularly on the interaction between innovation and diffusion. For those interested in pursuing the study of innovation, see also Nathan Rosenberg, Inside the Black Box: Technology and Economics (Cambridge: Cambridge University Press, 1982); and Edwin Mansfield, Industrial Research and Technological Innovation: An Econometric Analysis (New York: Norton, 1968). For a thoughtful effort to identify the components of innovation, see James J. Zwolenik, Science, Technology, and Innovation, prepared for the National Science Foundation (Columbus, Ohio: Battelle Columbus Labs, February 1973).

Despite these continuing efforts, however, other scholars of innovation have concluded that the forces that make for innovation are so numerous and intricate that they are not fully understood.[25]

John Jewkes et al., The Sources of Innovation (London: Macmillan, 1969).

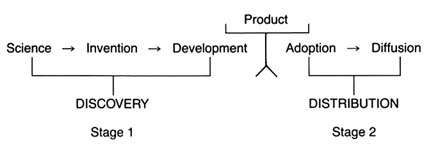

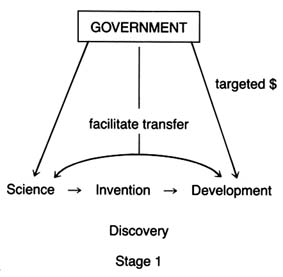

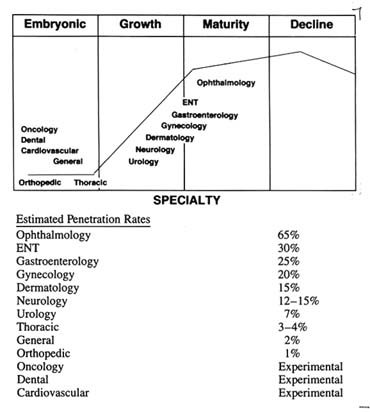

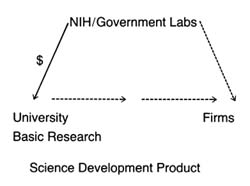

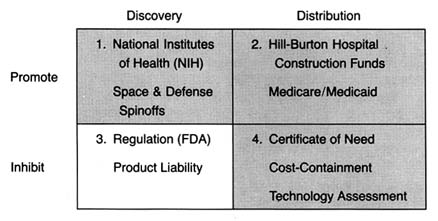

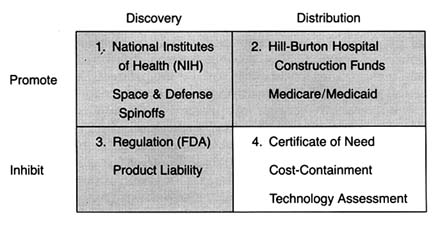

It is generally agreed, however, that certain stages are essential to the innovative process. Figure 2 illustrates the relevant stages.

The innovative process begins with science, which involves the systematic study of phenomena purely to add to the sum total of human knowledge.[26]

Shryock, American Medical Research, 2.

Drawing on a science base, technology is directed toward use and has two phases: invention and development. Invention is the first confidence that something should work and involves testing to demonstrate that it does work. Development encompasses a wide range of activities by which technical methods are applied to the new invention so that the task is more precisely defined, the search more specific, and the chances of success more susceptible to measure. In John Jewkes's words, "Invention is the stage at which the scent is first picked up, development the stage at which the hunt is in full cry."[27]

Jewkes, Sources, 28.

Finally, at the end of the development stage, technical considerations give way to market concerns. Experts have broken this stage into adoption and diffusion. Once a product has been adopted by the relevant decision makers, diffusion rates depend on a variety of market factors.

For purposes of simplicity, this book distills the stages into two main categories. Science, invention, and development lead to the creation of the product itself—described here as discovery. The second stage—decisions to adopt and use a product—is captured by the term distribution.

While simplification is helpful for discussion, it is important not to forget the complexity of the process. The existence of one element in the innovation stream does not ensure forward movement. Scientific understanding does not always produce applied technical development. However, the converse appears to be true: the sequence of innovation cannot occur without all elements. There can be no invention without science. For example, despite commitment to find a cure for cancer or AIDS, the necessary products await greater scientific understanding of the diseases. Moreover, the process is not perfectly linear. For many innovations, early prototypes are tried by physicians, who may encourage inventors to modify the product. Indeed, device innovation often is an iterative process involving inventors, physicians, and, occasionally, patients as well.

Figure 2. The stages of innovation.

This innovative process can be illustrated by example. Infant mortality resulting from premature birth is a serious medical problem. Assume that physicians observed that pure oxygen is beneficial, but excessive oxygen may lead to blindness or death in the premature infant. The discovery stage would require knowledge of human physiology and of the role of oxygen. At the invention stage, the innovator would design a prototype for a mechanism to deliver the oxygen in appropriate amounts. That would require engineering expertise, such as a meter to measure oxygen flow and so on. The development stage would involve the production of the product.

Once the product is designed and constructed, the distribution stage becomes relevant. The inventor has to persuade physicians and/or hospitals to adopt the new invention, and diffusion measures how many products have been purchased to treat premature infants. Clearly, important factors arise that help or hinder the progress of the oxygen device along the innovation continuum. Questions of funding (who supports the inventor?), natural resources (is there an adequate supply of oxygen for the products?), and costs (can hospitals afford to buy the invention?) arise.

We can understand much of the medical device industry in relation to the traditional model of innovation. The industry is a business, subject to many of the same economic forces that confront all highly innovative industries. We need to know how technology is transferred, how firms are organized to facilitate competitiveness, what economic strategies work, and so forth. However, in the medical device industry, as in many other areas of the economy, government policy has intervened at virtually every stage of the innovative process. Thus, to understand innovation, we must understand something of the public policy process as well.

Public Policy

Policymaking is the process of setting goals for the public good and implementing strategies to attain them. Every public policy is the outcome of an institutional decision. Public institutions, such as regulatory agencies or state courts, are themselves creatures of the political process. They have characteristics derived from their unique history and organization. In response to political, administrative, or legal pressures, public institutions can change. To understand public policy, then, we must be well grounded in the literature on bureaucracies, the judiciary, and the legislative process.[28]

The literature emerges primarily from the fields of political science, history, and law. It is impossible to provide a complete bibliography, but a good place to start an inquiry on how government agencies work is James Q. Wilson, Bureaucracy: What Government Agencies Do and Why They Do It (New York: Basic Books, 1989) and his earlier book, The Politics of Regulation (New York: Basic Books, 1980). See also James O. Freedman, Crisis and Legitimacy: The Administrative Process and American Government (Cambridge: Cambridge University Press, 1978). For discussion of how bureaucrats make decisions, see Eugene Bardach and Robert Kagan, Going By the Book: The Problem of Regulatory Unreasonableness (Philadelphia: Temple University Press, 1982); and Graham Allison, Essence of Decision (Boston: Little, Brown, 1971). For an understanding of the legislative process, begin with Eric Redman, The Dance of Legislation (New York: Simon and Schuster, 1973); and Hedrick Smith, The Power Game (New York: McGraw-Hill, 1988). For introduction to the judiciary, see Robert A. Carp and Ronald Stidham, Judicial Process in America (Washington, D.C.: Congressional Quarterly Press, 1990). For a discussion of litigation, see Jethro K. Lieberman, The Litigious Society (New York: Basic Books, 1981).

The public policies that affect medical device innovation must be understood in institutional, political, and legal contexts. History helps to establish the political and social context in which the policy intervention was introduced. For medical devices specifically, the institutions that set public policy include the National Institutes of Health (NIH), the FDA, the Medicare and Medicaid bureaucracies (the Health Care Financing Administration at the federal level for Medicare and various departments of health in each state for Medicaid administration), the state and federal courts, and a variety of other assessment and regulatory entities.

Each policy must also be understood in terms of the social values that motivated the initial intervention. Like the concept of innovation, the concept of value is elusive. The term has been given so many meanings by economists, philosophers, and the public that no precise definition emerges.[29]

For an excellent discussion of values in relation to public policy, see generally William W. Lowrance, Modern Science and Human Values (New York: Oxford University Press, 1985).

For the purposes of our discussion, however, value is used to reflect social preferences—preferences communicated by the public or interest groups to decision makers for implementation through the public policy process.

For example, the public values product safety. The public policy that manifests safety is government regulation of certain products, including medical devices. How well that value is carried out depends upon the institutional commitment to it (the politics of the FDA), the structure and the jurisdiction of the institution (what the law empowers FDA to do and not do), and the response of the regulated industry. As we begin to think about reform and change, it is imperative not to lose sight of the underlying values the original policies represent. The relevant questions include: Can we achieve those values more efficiently in other ways? Are the values outdated or superseded by newer ones?

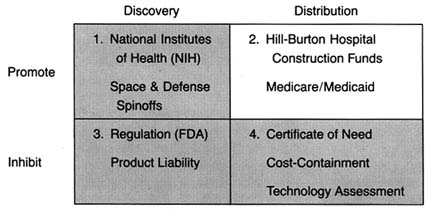

Again, for clarity, we must begin to simplify the complicated policy environment. Because we are interested in the impact of these policies on innovation, we can categorize them in relation to that process. Public policies, regardless of source or structure, tend either to promote innovation by accelerating the progress of a new product along the innovation stream or to inhibit the flow of innovation through barriers along the route.

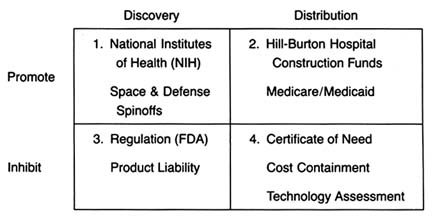

The Matrix

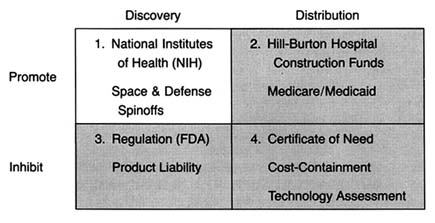

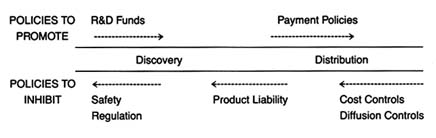

The development of a matrix helps to illustrate the interrelationship between public policy and innovation. In figure 3, the stages of the innovation continuum (from discovery to distribution) are on the horizontal axis. The two identified types of public policy (promote or inhibit) appear on the vertical axis.

Each box in the matrix contains examples of policies that reflect the coordinates. Thus, policies in box 1 promote innovation at the stage of discovery, and policies in box 4 inhibit innovation at the distribution stage. The matrix provides the organizing framework for the discussion in subsequent chapters.

Chapter 2 chronicles the evolution of innovation in the private sector and the preconditions for subsequent policy interventions. In the first half of this century, medical device technology and government institutions were quite independent and engaged in little meaningful interaction. Yet there were signs of change. Technological development was taking place in the private sector, and inventors overcame significant barriers to produce a variety of innovations. Public attitudes about the government's role in the innovative process underwent important and perceptible shifts, and public institutions were established that later would play pivotal roles in the device industry. Interaction came later. In terms of both technology development and government policy, World War II provided the transition to the modern environment.

Figure 3. The policy matrix.

Part II (chapters 3 through 8) follows the chronology of the boxes in the matrix. Policy trends are easily identified, as the numbers reflect the last four decades. In general, the policies of the 1950s (box 1) were dominated by promotion of innovation at the discovery stage, and the 1960s (box 2) saw promotion at the distribution stage, with consequent benefits for both discovery and distribution. The 1970s and 1980s did not completely reverse these trends, in that the policies initiated earlier continued. However, new concerns led to efforts to inhibit innovation. In the 1970s (box 3), significant efforts to increase regulation inhibited discovery; in the 1980s (box 4), concern about cost containment led to policies to inhibit product distribution. The chronology is not exact because some of the regulatory antecedents appeared before the policies were enforced. (The FDA had authority to regulate medical devices as early as 1938; the extension of authority with regulatory teeth, however, came in 1976.)

Subsequent policies did not replace earlier ones. Rather, policies were layered one on top of the other, so that public policy affected innovation at every step. To help us understand the proliferation of policies, each intervention is discussed in relation to the politics of its creation and evolution, the interest groups involved, the goals of the policymakers, and the changes over time.

Each chapter in part II relates to a box in the matrix presented in figure 3. Chapter 3 addresses how government policy promotes discovery (box 1). The NIH is the primary federal organization charged with supporting biomedical research, which has been accomplished through grants to researchers, primarily in universities. Some recent initiatives, most notably the Artificial Heart Program (AHP) at NIH, which is modeled on the experiences of the space program, have targeted specific device technologies. Additionally, chapter 3 looks at government-sponsored research in space and defense that has had some interesting effects on medical device technology. Also discussed are recent political efforts to realign the key research institutions—universities, government scientists, and the industry—so that medical technology is transferred from the basic science of the laboratory into the hands of product producers. The chapter evaluates these three initiatives in relation to the medical device industry.

Chapter 4 focuses on policies that promote distribution (box 2). Public policy has played a pivotal role in shaping the size and the composition of the medical device market. Federal and state governments have developed a complex set of policies to pay for health care services. After years of disinterest in health services, federal spending on the growth of the hospital infrastructure began after World War II. Chapter 4 describes how the public role expanded significantly with the enactment of Medicare and Medicaid in the 1960s. The primary health policy goal undergirding public payment is to increase access to health care for those previously excluded, including the elderly, the disabled, and the indigent. Although these programs did not directly address the medical device industry, their impact on that industry was dramatic. Government programs continue to inject billions of dollars into the medical marketplace every year, and medical technologies are a primary beneficiary. The design of these payment policies dramatically and idiosyncratically affects the size of the market for particular medical technologies.

A few examples illustrate the point. According to some estimates, in 1982 the government paid for over 41 percent of all medical expenditures.[30]

U.S. Department of Commerce, Bureau of the Census, Statistical Abstract of the United States, 1985 (Washington, D.C.: GPO, 1984), table 143.

The payment structure favored hospital-based technologies over nonhospital products. Intensive care units, full of new life-support and monitoring equipment, were virtually unknown in 1960; in 1984, they accounted for 8 percent of all hospital beds. Congress extended Medicare coverage for all end-stage renal disease (ESRD) patients in 1972. Kidney dialysis, virtually nonexistent in 1960, was used by 80,000 patients in 1984 at a cost to the government of $1.8 billion.[31]

U.S. Congress, Office of Technology Assessment, Federal Policies and the Medical Devices Industry (Washington, D.C.: GPO, October 1984).

The 1970s brought a new set of concerns to the policy arena—primarily product safety. Chapter 5 explores how the government inhibited discovery of medical devices through safety regulations (box 3). Regulation of medical devices is the primary vehicle for reducing risks of adverse reactions to these products. The federal government and, to a lesser extent, the states have recognized that certain medical products present unacceptable risks and require government intervention through safety and efficacy regulation.

Food and drug regulation dates back to the turn of the century. Congress extended the jurisdiction of the FDA to cover medical devices in 1938; the FDA acquired significantly more extensive regulatory powers under the 1976 Medical Device Amendments to the Federal Food, Drug, and Cosmetic Act, one of the many pieces of consumer protection legislation of the 1970s. The stated goal of these amendments was to "provide for the safety and effectiveness of medical devices intended for human use."[32]

Preamble to Medical Devices Amendment, Public Law 94-295, 90 Stat. 539.

The FDA's jurisdiction is over producers, and its regulations affect firms at the development stage. Cardiac pacemakers and intrauterine devices (IUDs) are used in this chapter to illustrate the impact of regulation on device technology. Because the law focuses on perceived risks associated with medical devices, the riskier the product, the more likely it will encounter the inhibiting forces of the FDA.

Chapter 6 continues the discussion of policies that inhibit device discovery (box 3). Although product liability has antecedents in early common-law rules, there was an explosion in product liability suits in the 1970s. Product liability seeks to inhibit the manufacture and use of devices if they are determined to be unsafe. However, state courts use completely different tools from FDA regulators to accomplish this substantially similar goal. Liability law in general has a less well-recognized, but clearly related, health mission. Its goal is to compensate individuals injured by defective products and to deter others from producing harmful products. It functions as a part of the health care system in that income to pay for medical costs, as well as noneconomic damage, is transferred from the producers of products to the consumers of products. It is essentially a form of insurance coverage for risk. The law includes both a compensatory and a safety function. The product liability system applies to all consumer products; medical products are included in this broad net. Product liability law, in terms of both the costs and availability of insurance and the consequences of lawsuits, can have a significant, indeed a crippling, impact on some producers.

Chapter 7 analyzes the series of recently imposed mechanisms that inhibit distribution of medical devices (box 4). These policies focus on cost containment rather than on safety, though some seek to control costs through evaluation of product quality. Concern about health care costs in the 1970s and 1980s has affected the momentum of federal and state payment programs. Efforts to restructure the system to control costs have had substantial effects on some segments of the medical device marketplace. Cost-containment strategies began with state-based Certificate of Need programs and expanded to a variety of cost-control forms through technology assessment mechanisms. The goal of assessment processes is to ensure that only the "best" technologies are distributed; other technologies should be abandoned. Chapter 7 focuses on federal efforts to institutionalize technology assessment beyond the existing policymaking bodies. In addition, the new payment system under Medicare, known as the Prospective Payment System (PPS), was instituted to control the wildly escalating costs of Medicare. This program has created a new set of idiosyncratic effects on medical device technology.

Chapter 8 introduces the emerging issues of a global marketplace. These issues do not appear within the matrix because it is

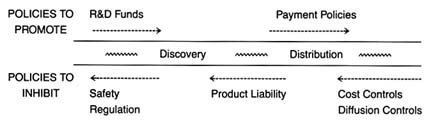

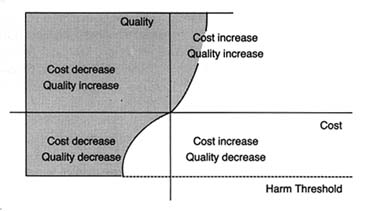

Figure 4. Policies affecting medical device innovation.

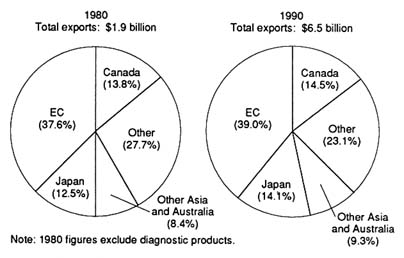

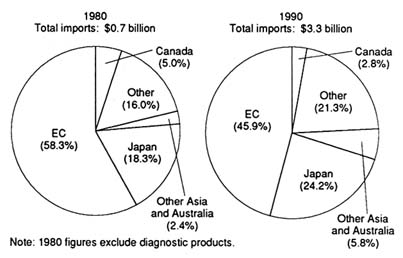

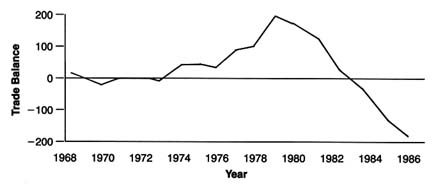

unclear whether the international market and the policies of foreign governments regarding medical technology will help domestic medical device producers (that is, promote distribution) or pose a competitive threat to domestic producers (that is, inhibit distribution). Through a brief look at three major markets—Japan, the emerging European Community, and China—these challenges will be assessed.

Part III provides a prognosis. Returning to the innovation continuum, the discussion in chapter 9 falls into three sections (figure 4). The first analyzes the present policy environment at both the discovery and the distribution stages, with illustrations of creative industry strategies that respond to policy incentives. The second section discusses pending policy reforms and their possible effect on the flow of new products. The third section discusses interactions among the various policies that are the inevitable consequences of policy proliferation. Some ways to improve the policy process are discussed.

Chapter 10 looks to the future. The contributions of medical devices to the fight against disease have been critical. However, there are dangers posed by misuse and overuse. The medical arms race must be managed with an understanding of the economic, political, and moral dimensions of medical technology.

The development and application of an analytical framework to the medical device industry only scratches the surface. Further empirical work is necessary to improve our understanding of medical technologies, of the process of innovation, and of the impact of government institutions on the private sector. It is hoped that the issues raised in this book will encourage this research. We must strive for rational policy reform grounded in an understanding of the values and goals of our health care system.

Finally, this study makes clear that policy proliferation is inherent in our political system and reflects the complexity of technology. The system has many benefits, but it also has costs and limitations. It is useful to step back and view the whole landscape to observe the dynamic interactions between the public and the private sectors. In the case of our patient—medical devices—reform may indeed be a matter of life and death.

2

Preconditions for Interaction

When my grandfather George had a stroke he was led into the house and put to bed, and the Red Men sent lodge brothers to sit with him to exercise the curative power of brotherhood…. Ida Rebecca called upon modern technology to help George. From a mail-order house she ordered a battery-operated galvanic device which applied the stimulation of low-voltage electrical current to his paralysed limbs…. In Morrisonville death was a common part of life. It came for the young as relentlessly as it came for the old. To die antiseptically in a hospital was almost unknown. In Morrisonville death still made house calls.

Russell Baker, Growing Up

Journalist Russell Baker's description of his grandfather's death is typical of American health care in the 1920s.[1]

Russell Baker, Growing Up (New York: New American Library, 1982), 36-38.

The technological changes that characterized agricultural and industrial production had not yet come to medicine.[2]

Stephen Toulmin, "Technological Progress and Social Policy: The Broader Significance of Medical Mishaps," in Mark Siegler et al., eds., Medical Innovation and Bad Outcomes: Legal, Social, and Ethical Responses (Ann Arbor: Health Administration Press, 1987), 22.

Physicians had limited knowledge, and commonly prescribed therapies were improved diets, more exercise, and cleaner environments, not the highly technological interventions customary today.[3]

Selma J. Mushkin, Lynn C. Paringer, and Milton M. Chen, "Returns to Biomedical Research, 1900-1975: An Initial Assessment of Impacts on Health Expenditures," in Richard H. Egdahl and Paul M. Gertman, eds., Technology and the Quality of Health Care (Germantown, Md.: Aspen Systems, 1978).

Doctors held out little hope for treatment of most illnesses; consumers like Baker's grandmother often turned to folk remedies or miracle cures. Hospitals were shunned as places where destitute people without family went to die.[4]

Charles E. Rosenberg, Caring for Strangers: The Rise of America's Hospital System (New York: Basic Books, 1987). This is a comprehensive study of American hospitals from 1800 to 1920.

Government played a negligible role in health care, and any costs of treatment would have been borne by the Baker family, or the treatment forgone if money were not available.

During the first four decades of the twentieth century, innovation in health sciences did occur. Important breakthroughs included improved aseptic surgery techniques, sulfa drugs, and vaccines to treat, and prevent the spread of, infectious diseases. The nascent medical device industry assisted in the advancement of medical science. There were increasingly sophisticated scopes for observation of internal bodily functions and advances in the laboratory equipment that permitted precise measurement of biochemical phenomena. However, quack devices proliferated in addition to legitimate innovations. Many fraudulent devices promising miracle cures capitalized on new discoveries in the fields of electricity and magnetism. The galvanic device purchased to treat Baker's grandfather was typical of popular quack products of the time. Unfortunately for the industry as a whole, these quack devices contributed to the public perception that medical devices were marginal to advanced medical care.

This chapter illustrates how individuals and firms overcame structural and scientific barriers to innovation in the private sector. Various private individuals and corporations managed to bridge these gaps. As a result, the medical device sector enjoyed modest and steady growth.

What is most interesting to the modern reader is the limited role that government played in medical technology innovation. However, seeds of the subsequent multiple roles of government were sown in this early period. By the eve of World War II, several public institutions had been created that would later significantly affect device development. The National Institutes of Health (NIH) would promote medical discovery; the Food and Drug Administration (FDA) would inhibit it. Government involvement in medical device distribution, however, did not occur until somewhat later. World War II accelerated the process of device innovation because of government involvement in the war effort; it both created a demand for medical innovations and overcame the public's reluctance to accept a government role in health policy.

The foundation for subsequent business-government interaction in medical device innovation had been laid. To use our analogy, the preconditions for later prescriptions to treat our patient were set; full-blown medical intervention awaited the 1950s.

Taking the Patient's History: Industry Overview, 1900–1940

Barriers to Device Innovation in the Private Sector

Throughout the eighteenth and nineteenth centuries there was no continuous scientific tradition in America. Medical science depended upon foreign discoveries, which were dominated by Britain in the early nineteenth century, France at midcentury, and Germany in the last half of the century. The lack of research has been attributed to the dearth of certain conditions and facilities essential to medical studies.[5]

Richard H. Shryock, American Medical Research, Past and Present (New York: The Commonwealth Fund, 1947).

The absence of dynamic basic science obviously limited the possibility of technological breakthroughs.

However, the early part of the twentieth century witnessed a rise in private sector commitment to basic medical science. Philanthropists, intrigued by possibilities of improvements in health sciences, began to endow private research facilities. The first was the Rockefeller Institute, founded in New York City in 1902. A number of other philanthropic foundations were established in Rockefeller's wake, including the Hooper Institute for Medical Research, the Phipps Institute in Philadelphia, and the Cushing Institute in Cleveland. This period has been called the "Era of Private Support."[6]

Ibid., 99.

These efforts improved the scientific research base in America, but it was still weak.

Compounding this weakness were barriers between basic science and medical practice. During the nineteenth century, practicing physicians had little concern for, or interest in, medical research. Most doctors practiced traditional medicine, relying on their small arsenal of tried-and-true remedies. However, in the first decades of the twentieth century, medicine began to change profoundly, which led to organizational permutations through which the medical profession became more scientific and rigorous.[7]

Paul Starr, The Social Transformation of American Medicine (New York: Basic Books, 1982), 79-145. This comprehensive study of American medical practice is a classic.

University medical schools began to grow as the need for integration of medical studies and basic sciences was acknowledged. Support for medical schools tied research to practice and rewarded doctors for research-based medical education. By the end of this period, then, many of the problems associated with lack of basic medical science research had begun to be addressed. However, by modern standards, the commitment to research was extremely small.

As our discussion of innovation revealed, basic scientific research must be linked to invention and development. In other words, there must be mechanisms by which technology is transferred from one stage in the innovation continuum to another. In the nineteenth century, there were serious gaps between basic medical science and applied engineering, which is an essential part of medical device development. American engineering education rarely involved research.[8]

Leonard S. Reich, The Making of American Industrial Research: Science and Business at GE and Bell, 1876-1926 (Cambridge: Cambridge University Press, 1985), 24.

Engineers were trained in technical schools but had few university contacts thereafter. Furthermore, manufacturers had little patience for the ivory towers of university science; they looked instead for profits in the marketplace. Engineering practitioners emphasized applying knowledge to the design of technical systems. Thus, engineers based in private companies were cautious about the developments of advanced technology, preferring to make gradual moves in known directions.[9]

Ibid., 240.

Producers were slow to take advantage of any advances in research in the United States or abroad. Established industries tended to ignore science and to depend on empirical inventions for new developments.[10]

John P. Swann, American Scientists and the Pharmaceutical Industry: Cooperative Research in Twentieth-Century America (Baltimore: Johns Hopkins University Press, 1988).

Patents for medical products did accelerate at the turn of the century, but a majority of them represented engineering shortcuts, not true innovations.[11]

Shryock, American Medical Research, 145.

A few very large corporations addressed this problem through the creation of in-house research laboratories that combined basic science and applied engineering. These institutions focused primarily on incremental product development, but innovative technologies did appear. Thus, while the gap between universities and corporations did not close, some firms became research-oriented through the establishment of independent laboratories. Companies with large laboratories included American Telephone and Telegraph and General Electric (GE). One major medical device, the X-ray, emerged from GE's research lab. It will be described at greater length shortly.

Further barriers to product development arose from the uneasy relationship between product manufacturers and both university researchers and medical practitioners. A rash of patent applications followed the development of aseptic surgery. Between 1880 and 1890, the Patent Office granted about 1,200 device patents. The debate that raged over patents of medical products illustrates the tension between health care and profits. Universities generally resisted patenting innovations for several reasons. They valued the free flow of scientific knowledge among scholars. Because basic scientific research is so interconnected, they faced hard questions about how to allocate the profits from a final product. They were also concerned that a profit orientation within academia would discourage the practice of sharing scientific knowledge for the betterment of all.[12]

The debate about the appropriate role of academic scientists within universities and about private sector profits continues to this day. For a discussion of the current debate and the relevant public policy on these issues, see chapter 3.

Innovations produced by medical inventors raised additional ethical problems. Often the physician-inventors stood by while commercial organizations exploited remedies based on their work. The medical profession had a traditional ethic against profiting from patents, and the debates about the ethics of patenting medical products continued through this period.[13]

Shryock, American Medical Research, 143.

For example, the Jefferson County Medical Society of Kentucky stated that it "condemns as unethical the patenting of drugs or medical appliances for profit whether the patent be held by a physician or be transferred by him to some university or research fund, since the result is the same, namely, the deprivation of the needy sick of the benefits of many new medical discoveries through the acts of medical men."[14]

Ibid., 122.

Dr. Chevalier Jackson, a noted expert in diseases of the throat, developed many instruments to improve diagnostic and surgical techniques. In his autobiography in 1938, he wrote: