Preferred Citation: Ames, Karyn R., and Alan Brenner, editors. Frontiers of Supercomputing II: A National Reassessment. Berkeley: University of California Press, 1994. http://ark.cdlib.org/ark:/13030/ft0f59n73z/

Frontiers of Supercomputing II: A National Reassessment. Edited by Karyn R. Ames

PREFACE

In 1983, Los Alamos National Laboratory cosponsored the first Frontiers of Supercomputing conference and, in August 1990, cosponsored Frontiers of Supercomputing II: A National Reassessment, along with the National Security Agency, the Defense Advanced Research Projects Agency, the Department of Energy, the National Aeronautics and Space Administration, the National Science Foundation, and the Supercomputing Research Center.

Continued leadership in supercomputing is vital to U.S. technological progress, to domestic economic growth, to international industrial competitiveness, and to a strong defense posture. In the seven years since the first conference, the U.S. has maintained its lead, although that lead has eroded significantly in several key areas. To help maintain and extend a leadership position, the 1990 conference aimed to facilitate a national reassessment of U.S. supercomputing and of the economic, technical, educational, and governmental barriers to continued progress. The conference addressed events and progress since 1983, problems in the U.S. supercomputing industry today, R&D priorities for high-performance computing in the U.S., and policy at the national level.

The challenges in 1983 were to develop computer hardware and software based on parallel processing, to build a massively parallel computer, and to write new schemes and algorithms for such machines. In the 1990s, the dream of computers with parallel processors is being realized. Some computers, such as Thinking Machines Corporation's Connection Machine, have more than 65,000 parallel processors and thus are massively parallel.

Participants and speakers at the 1990 conference included senior managers and policy makers, chief executive officers and presidents of companies, computer vendors, industrial users, U.S. senators, high-level federal officials, national laboratory directors, and renowned academicians.

The discussions published here incorporate much of the widely ranging, often spontaneous, and invariably lively exchanges that took place among this diverse group of conferees.

Specifically, Frontiers of Supercomputing II features presentations on the prospects for and limits of hardware technology, systems architecture, and software; new mathematical models and algorithms for parallel processing; the structure of the U.S. supercomputing industry for competition in today's international industrial climate; the status of U.S. supercomputer use; and highlights from the international scene. The proceedings conclude with a session focused on government initiatives necessary to preserve and extend the U.S. lead in high-performance computing.

Conferees faced a new challenge—a dichotomy in the computing world. The supercomputers of today are huge, centrally located, expensive mainframes that "crunch numbers." These computers are very good at solving intensive calculations, such as those associated with nuclear weapons design, global climate, and materials science. Some computer scientists consider these mainframes to be dinosaurs, and they look to the powerful new microcomputers, scientific workstations, and minicomputers as the "supercomputers" of the future. Today's desktop computers can be as powerful as early versions of the Cray supercomputers and are much cheaper than mainframes.

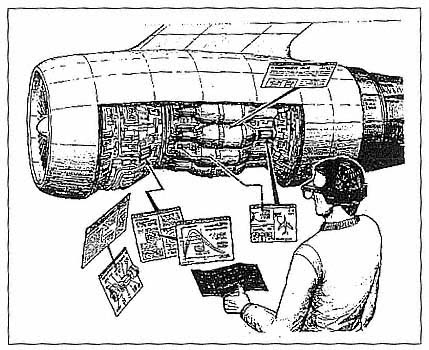

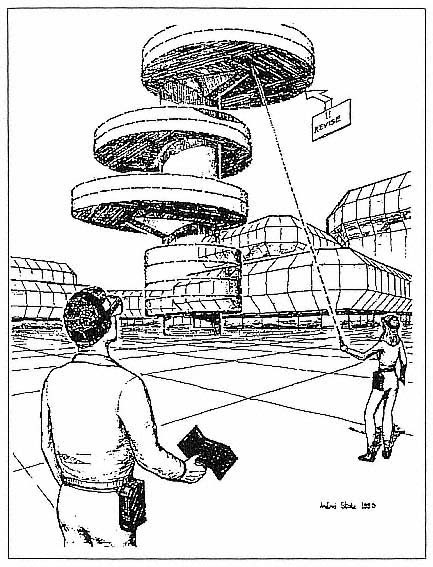

Conference participants expressed their views that the mainframes and the powerful new microcomputers have complementary roles. The challenge is to develop an environment in which the ease and usefulness of desktop computers are tied to the enormous capacity and performance of mainframes. Developments must include new user interfaces, high-speed networking, graphics, and visualization. Future users may sit at their desktop computers and, without knowing it, have their work parceled out to mainframes, or they may access databases around the world.

Los Alamos National Laboratory and the National Security Agency wish to thank all of the conference cosponsors and participants. The 1990 conference was a tremendous success. When the next Frontiers of Supercomputing conference convenes, the vision of a seamless, comprehensive computing environment may then be a reality. The challenge now is to focus the energies of government, industry, national laboratories, and universities to accomplish this task.

ACKNOWLEDGMENTS

The second Frontiers of Supercomputing conference, held at Los Alamos National Laboratory, Los Alamos, New Mexico, August 20–24, 1990, was a tremendous success, thanks to the participants. As colleagues in high-performance computing, the conference participants avidly interacted with each other, formed collaborations and partnerships, and channeled their talents into areas that complemented each other's activities. It was a dynamic and fruitful conference, and the conference organizers extend special thanks to all of the participants.

Lawrence C. Tarbell, Jr., of the National Security Agency (NSA) was one of the conference organizers. The other conference organizer was William L. "Buck" Thompson, Special Assistant to the Director of Los Alamos National Laboratory. Members of the organizing committee from Los Alamos were Andy White and Gary Doolen. The organizing committee members from the NSA were Norman Glick and Byron Keadle; from the Supercomputing Research Center, Harlow Freitag; from the National Science Foundation, Tom Weber; from the Department of Energy, Norm Kreisman; from the Defense Advanced Research Projects Agency, Stephen Squires; and from the National Aeronautics and Space Administration, Paul Smith.

The success of this conference was in no small measure due to Donila Martinez of Los Alamos National Laboratory. She became the nerve center of northern New Mexico in finding places for conference participants to stay and in taking care of myriad conference preparation details.

Thanks also go to Kermith Speierman from NSA. He was the inspiration for the first Frontiers of Supercomputing conference in 1983 and was to a great extent the inspiration for this second conference, as well.

Nick Metropolis can clearly be called one of the true fathers of computing. He was in Los Alamos in the very early days, during the Manhattan Project, and he became the person in charge of building the MANIAC computer. He can tell you about the dawn of parallel processing.

You might think we are just entering that era. It actually began in Los Alamos about 50 years ago, when teams of people were operating mechanical calculators in parallel.

All recording and transcription of the conference was done by Steven T. Brenner, a registered professional reporter. Kyle T. Wheeler of the Computing and Communications Division at Los Alamos National Laboratory provided guidance on computing terminology.

Lisa Rothrock, an editor with B. I. Literary Services, in Los Alamos, New Mexico, gave much-needed editorial assistance for the consistency, clarity, and accuracy of these proceedings. Page composition and layout were done by Wendy Burditt, Chuck Calef, and Kathy Valdez, compositors at the Los Alamos National Laboratory Information Services Division. Illustrations were prepared for electronic placement by Linda Gonzales and Jamie Griffin, also of the Los Alamos National Laboratory Information Services Division.

1—

OPENING, BACKGROUND, AND QUESTIONS POSED FOR THIS CONFERENCE

Sig Hecker, Director of Los Alamos National Laboratory, welcomed attendees to the conference and introduced Senator Bingaman for a welcome speech. Kermith Speierman of the National Security Agency reviewed events since the last Frontiers of Supercomputing conference (1983), set the goals of the current conference, and charged the participants to meet those goals. The keynote address was given by Erich Bloch, who presented his perspective on the current status of supercomputing in the United States.

Session Chair

Larry Tarbell, National Security Agency

Buck Thompson, Los Alamos National Laboratory

Welcome

Sig Hecker

Siegfried S. Hecker is the Director of Los Alamos National Laboratory, in Los Alamos, New Mexico, a post he has held since January 1986. Dr. Hecker joined the Laboratory as a Technical Staff Member in the Physical Metallurgy Group in 1973 and subsequently served as Chairman of the Center for Materials Science and Division Leader of Materials Science and Technology. He began his professional career at Los Alamos in 1968 as a Postdoctoral Appointee. From 1970 to 1973, he worked as a Senior Research Metallurgist at General Motors Research Laboratories. He earned his Ph.D. in metallurgy from Case Western Reserve University in 1968.

Dr. Hecker received the Department of Energy's E. O. Lawrence Award for Materials Science in 1984. In 1985, he was cited by Science Digest as one of the year's top 100 innovators in science. In October of 1989, he delivered the Distinguished Lecture in Materials and Society for the American Society for Metals. The American Institute of Mining, Metallurgical, and Petroleum Engineers awarded him the James O. Douglas Gold Medal in 1990.

Among the scientific organizations in which Dr. Hecker serves are the Leadership/Applications to Practice Committee of the Metallurgical Society, the Board of Directors of the Council on Superconductivity for American Competitiveness, and the Board of Advisors of the Santa Fe Institute. Public-service agencies in which he is active include the University of New Mexico Board of Regents, the Board of Directors of Carrie Tingley Hospital in Albuquerque, the Los Alamos Area United Way Campaign, and the Los Alamos Ski Club, of which he is President.

Welcome to Los Alamos and to New Mexico. I think most of you know that it was in 1983—in fact, seven years ago this week—that we held the first Frontiers of Supercomputing conference here at Los Alamos under the sponsorship of Los Alamos National Laboratory and the National Security Agency (NSA) to assess the critical issues that face supercomputing. Today we are here to make a national reassessment of supercomputing. The expanded number of sponsors alone, I think, reflects the increased use of supercomputing in the country. The sponsors of this conference are NSA, Los Alamos National Laboratory, the Defense Advanced Research Projects Agency, the Department of Energy, the National Science Foundation, and the Supercomputing Research Center.

I want to make a few brief remarks about both the conference and computing at the Laboratory. I found it very interesting to go back and look through the first Frontiers of Supercomputing book. Several things haven't changed at all since the last conference. K. Speierman, in his conference summary, pointed out very nicely that increased computational power will allow us to make significant advances in science, particularly in nonlinear phenomena. Supercomputing, we pointed out at the first conference, also will improve our technology and allow us to build things more efficiently. That certainly remains ever so true today. Indeed, leadership in high-performance computing is obviously vital to U.S. military and economic competitiveness.

In the preface to Frontiers of Supercomputing (Metropolis et al. 1986), the conference participants indicated that it will take radical changes in computer architecture, from single to massively parallel processors, to keep up with the demand for increased computational power. It was also fascinating that the authors at that time warned that the importance of measures to more effectively use available hardware cannot be overemphasized, namely measures such as improved numerical algorithms and improved software. Once again, these comments remain ever so true today.

However, there are a number of things that have changed since 1983. I think we have seen a substantial increase in parallel processing. At the Laboratory today, the CRAY Y-MPs are the workhorses for our computations. We have also made great progress in using the massively parallel Connection Machines, from Thinking Machines Corporation, to solve demanding applications problems.

I think all the way around, in the country and in the world, we have seen a revolution in the computing environment, namely, that the personal computer has come into its own—to the tune of about 50 million units in the decade of the 1980s. That number includes one user, my eight-year-old daughter, who now has computational power at her fingertips that scientists wish they would have had a decade or two ago. Also, the trend toward high-power scientific workstations, networking, and ultra-high-speed graphics will forever change the way we do computing.

Another thing that hasn't changed, however, is the insatiable appetite of scientists who want more and more computing power. Seven years ago we had a few CRAY-1s at Los Alamos, and, just to remind you, that was only seven years after Seymour Cray brought serial number 1 to Los Alamos back in 1976. Today we have about 65 CRAY-1 equivalents, plus a pair of Connection Machine 2s. Nevertheless, I constantly hear the cry for more computational horsepower. At Los Alamos, that need is not only for the defense work we do but also for many other problems, such as combustion modeling or enhanced oil recovery or global climate change or how to design materials from basic principles.

However, a fundamental change has occurred. I think today, to remain at the forefront of computing, we can't simply go out and buy the latest model of supercomputer. We clearly will have to work smarter, which means that we'll have to work much more in conjunction with people at universities and with the computer and computational equipment manufacturers.

Therefore, I look forward to this reassessment in Frontiers of Supercomputing II, and I think it will be an interesting week. Typically, it's the people who make a conference. And as I look out at the audience, I feel no doubt that this will be a successful conference.

It is my pleasure this morning to introduce the person who will officially kick off the conference. We are very fortunate to have Senator Jeff Bingaman of New Mexico here. Senator Bingaman also played a similar role at the conference in 1983, shortly after he was elected to the United States Senate.

Senator Bingaman grew up in Silver City, a little town in the southern part of the state. He did his undergraduate work at Harvard and received a law degree from Stanford University. He was Attorney General for the State of New Mexico before being elected to the United States Senate.

I have had the good fortune of getting to know Senator Bingaman quite well in the past five years. He certainly is one of the greatest advocates for science and technology in the United States Congress. He serves on the Senate Armed Services Committee and also on the Senate Energy and Natural Resources Committee. On the Armed Services Committee, he heads the Subcommittee on Defense Industry and Technology. In both of those committees, he has been a strong advocate for science and technology in the nation, and particularly in Department of Defense and Department of Energy programs. In the Armed Services subcommittee, he spearheaded an effort to focus on our critical technologies and competitiveness, both from a military, as well as an economic, standpoint. And of course, there is no question that supercomputing is one of those critical technologies.

Thus, it is most appropriate to have Senator Bingaman here today to address this conference, and it's my honor and pleasure to welcome him to Los Alamos.

Reference

Frontiers of Supercomputing, N. Metropolis, D. H. Sharp, W. J. Worlton, and K. R. Ames, Eds., University of California Press, Berkeley, California (1986).

Supercomputing As a National Critical Technologies Effort

Senator Jeff Bingaman

Senator Jeff Bingaman (D-NM) began his law career as Assistant New Mexico Attorney General in 1969. In 1978 he was elected Attorney General of New Mexico. Jeff was first elected to the United States Senate in 1982 and reelected in 1988. In his two terms, Jeff has focused on restoring America's economic strength, preparing America's youth for the 21st century, and protecting our land, air, and water for future generations.

Jeff was raised in Silver City, New Mexico, and attended Harvard University, graduating in 1965 with a bachelor's degree in government. He then entered Harvard University Law School, graduating in 1968. Jeff served in the Army Reserves from 1968 to 1974.

It is a pleasure to be here and to welcome everyone to Los Alamos and to New Mexico.

I was very fortunate to be here seven years ago, when I helped to open the first Frontiers of Supercomputing conference on a Monday morning in August, right here in this room. I did look back at the remarks I made then, and I'd like to cite some of the progress that has been made since then and also indicate some of the areas where I think we perhaps are still in the same ruts we were in before. Then I'll try to put it all in a little broader context of how we go about defining a rational technology policy for the entire nation in this post-Cold War environment.

Back in 1983, I notice that my comments then drew particular attention to the fact that Congress was largely apathetic and inattentive to the challenge that we faced in next-generation computing. The particular fact or occurrence that prompted that observation in 1983 was that the Defense Advanced Research Projects Agency's (DARPA's) Strategic Computing Initiative, which was then in its first year, had been regarded by some in Congress as a "bill payer"—as one of those programs that you can cut to pay for supposedly higher-priority strategic weapons programs. We had a fight that year while I worked with some people in the House to try to maintain the $50 million request that the Administration had made for funding the Strategic Computing Program for DARPA.

Today, I do think that complacency is behind us. Over the past seven years, those of you involved in supercomputing/high-performance supercomputing have persuasively made the case both with the Executive Branch and with the Congress that next-generation computers are critical to the nation's security and to our economic competitiveness. More importantly, you have pragmatically defined appropriate roles for government, industry, and academia to play in fostering development of the key technologies needed for the future and—under the leadership of the White House Science Office, more particularly, of the Federal Coordinating Committee on Science, Engineering, and Technology (FCCSET)—development of an implementation plan for the High Performance Computing Initiative.

That initiative has been warmly received in Congress. Despite the fact that we have cuts in the defense budget this year and will probably have cuts in the next several years, both the Senate Armed Services Committee and the House Armed Services Committee have authorized substantial increases in DARPA's Strategic Computing Program. In the subcommittee that I chair, we increased funding $30 million above the Administration's request, for a total of $138 million this next year. According to some press reports I've seen, the House is expected to do even better.

Similarly, both the Senate Commerce Committee and the Senate Energy Committee have reported legislation that provides substantial five-year authorizations for NSF at $650 million, for NASA at $338 million, and for the Department of Energy (DOE) at $675 million, all in support of a national high-performance computing program. Of course, the National Security Agency and other federal agencies are also expected to make major contributions in the years ahead.

Senator Al Gore deserves the credit for spearheading this effort, and much of what each of the three committees that I've mentioned have done follows the basic blueprint laid down in S. B. 1067, which was a bill introduced this last year that I cosponsored and strongly supported. Mike Nelson, of Senator Gore's Commerce Committee staff, will be spending the week with you and can give you better information than I can on the prospects in the appropriations process for these various authorizations.

One of the things that has struck me about the progress in the last seven years is that you have made the existing institutional framework actually function. When I spoke in 1983, I cited Stanford University Professor Edward Feigenbaum's concern (expressed in his book The Fifth Generation) that the existing U.S. institutions might not be up to the challenge from Japan and his recommendation that we needed a broader or bolder institutional fix to end the "disarrayed and diffuse indecision" he saw in this country and the government. I think that through extraordinary effort, this community, that is, those of you involved in high-performance supercomputing, has demonstrated that existing institutions can adapt and function. You managed to make FCCSET work at a time when it was otherwise moribund. You've been blessed with strong leadership in some key agencies. I'd like to pay particular tribute to Craig Fields at DARPA and Erich Bloch at NSF. Erich is in his last month of a six-year term as the head of NSF, and I believe he has done an extraordinary job in building bridges between the academic world, industry, and international laboratories. His efforts to establish academic supercomputer centers and to build up a worldwide high-data-rate communications network are critical elements in the progress that has been made over the last seven years. Of course, those efforts were not made and those successes were not accomplished without a lot of controversy and complaints from those who felt their own fiefdoms were challenged.

On the industrial side, the computer industry has been extraordinarily innovative in establishing cooperative institutions. In 1983, both the Semiconductor Research Cooperative (SRC) and Microelectronics and Computer Technology Corporation (MCC) were young and yet unproved. Today SRC and MCC have solid track records of achievement, and MCC has had the good sense to attract Dr. Fields to Austin after his dismissal as head of DARPA, apparently for not pursuing the appropriate ideological line.

More recently, industry has put together a Computer Systems Policy Project, which involves the CEOs of our leading computer firms, to think through the key generic issues that face the industry. Last month, the R&D directors of that group published a critical technologies report outlining the key success factors that they saw to be determinative of U.S. competitiveness in the 16 critical technologies for that industry.

As I see it, all of these efforts have been very constructive and instructive for the rest of us and show us what needs to be done on a broader basis in other key technologies.

The final area of progress I will cite is the area I am least able to judge, namely, the technology itself. My sense is that we have by and large held our own as a nation vis-à-vis the rest of the world in competition over the past seven years. I base this judgment on the Critical Technology Plan—which was developed by the Department of Defense (DoD), in consultation with DOE—and the Department of Commerce's Emerging Technologies Report, both of which were submitted to Congress this spring. According to DoD, we are ahead of both Japan and Europe in parallel computer architectures and software producibility. According to the Department of Commerce report, we are ahead of both Japan and Europe in high-performance computing and artificial intelligence. In terms of trends, the Department of Commerce report indicates that our lead in these areas is accelerating relative to Europe but that we're losing our lead in high-performance computing over Japan and barely holding our lead in artificial intelligence relative to Japan.

Back in 1983, I doubt that many who were present would have said that we'd be as well off as we apparently are in 1990. There was a great sense of pessimism about the trends, particularly relative to Japan. The Japanese Ministry of International Trade and Industry (MITI) had launched its Fifth Generation Computer Project by building on their earlier national Superspeed Computer Project, which had successfully brought Fujitsu and Nippon Electric Corporation to the point where they were challenging Cray Research, Inc., in conventional supercomputer hardware. Ed Feigenbaum's book and many other commentaries at the time raised the specter that this technology was soon to follow consumer electronics and semiconductors as an area of Japanese dominance.

In the intervening years, those of you here and those involved in this effort have done much to meet that challenge. I'm sure all of us realize that the challenge continues, and the effort to meet it must continue. While MITI's Fifth Generation Project has not achieved its lofty goals, it has helped to build an infrastructure second only to our own in this critical field. Japanese industry will continue to challenge the U.S. for first place. Each time I've visited Japan in the last couple of years, I've made it a point to go to IBM Japan to be briefed on the progress of Japanese industry, and they have consistently reported solid progress being made there, both in hardware and software.

I do think we have more of a sense of realism today than we had seven years ago. Although there is no room for complacency in our nation about the efforts that are made in this field, I think we need to put aside the notion that the Japanese are 10 feet tall when it comes to developing technology. Competition in this field has helped both our countries. In multiprocessor supercomputers and artificial intelligence, we've spawned a host of new companies over the past seven years in this country. Computers capable of 10¹² floating-point operations per second are now on the horizon. New products have been developed in the areas of machine vision, automatic natural-language understanding, speech recognition, and expert systems. Indeed, expert systems are now widely used in the commercial sector, and numerous new applications have been developed for supercomputers.

Although we are not going to be on top in all respects of supercomputing, I hope we can make a commitment to remain first overall and to not cede the game in any particular sector, even those where we may fall behind.

I have spent the time so far indicating progress that has been made since the first conference. Let me turn now to just a few of the problems I cited in 1983 and indicate some of those that still need to be dealt with.

The most fundamental problem is that you in the supercomputing field are largely an exception to our technology policy-making nationwide. You have managed through extraordinary effort to avoid the shoals of endless ideological industrial-policy debate in Washington. Unfortunately, many other technologies have not managed to avoid those shoals.

Let me say up front that I personally don't have a lot of patience for these debates. It seems to me our government is inextricably linked with industry through a variety of policy mechanisms—not only our R&D policy but also our tax policy, trade policy, anti-trust policy, regulatory policy, environmental policy, energy policy, and many more. The sum total of these policies defines government's relationship with each industry, and the total does add up to an industrial policy. This is not a policy for picking winners and losers among particular firms, although obviously we have gone to that extent in some specific cases, like the bailouts of Lockheed and Chrysler and perhaps in the current debacle in the savings and loan industry.

In the case of R&D policy, it is clearly the job of research managers in government and industry to pick winning technologies to invest in. Every governor in the nation, of both political parties, is trying to foster winning technologies in his or her state. Every other industrialized nation is doing the same. I don't think anybody gets paid or promoted for picking losing technologies.

Frankly, the technologies really do appear to pick themselves. Everyone's lists of critical technologies worldwide overlap to a tremendous degree. The question for government policy is how to ensure that some U.S. firms are among the world's winners in the races to develop supercomputers, advanced materials, and biotechnology applications, to cite just three examples that show up on everybody's list.

In my view, the appropriate role for government in its technology policy is to provide a basic infrastructure in which innovation can take place and to foster basic and applied research in critical areas that involve academia, federal laboratories, and industry so that risks are reduced to a point where individual private-sector firms will assume the remaining risk and bring products to market. Credit is due to Allan D. Bromley, Assistant to the President for Science and Technology, for having managed to get the ideologues in the Bush Administration to accept a government role in critical, generic, and enabling technologies at a precompetitive stage in their development. He has managed to get the High Performance Computing Initiative, the Semiconductor Manufacturing Technology Consortium, and many other worthwhile technology projects covered by this definition.

Frankly, I have adopted Dr. Bromley's vocabulary—"critical, generic, enabling technologies at a precompetitive stage"—in the hope of putting this ideological debate behind us. In Washington we work studiously to avoid the use of the term "industrial policy," which I notice we used very freely in 1983. My hope is that if we pragmatically go about our business, we can get a broad-based consensus on the appropriate roles for government, industry, and academia in each of the technologies critical to our nation's future. You have, as a community, done that for high-performance supercomputing, and your choices have apparently passed the various litmus tests of a vast majority of members of both parties, although there are some in the Heritage Foundation and other institutions who still raise objections.

Now we need to broaden this effort. We need to define pragmatically a coherent, overall technology policy and tailor strategies for each critical technology. We need to pursue this goal with pragmatism and flexibility, and I believe we can make great headway in the next few years in doing so.

Over the past several years, I have been attempting to foster this larger, coherent national technology policy in several ways. Initially, we placed emphasis on raising the visibility of technology issues within both the Executive Branch and the Congress. The Defense Critical Technology Plan and the Emerging Technologies Report have been essential parts of raising the visibility of technological issues. Within industry I have tried to encourage efforts to come up with road maps for critical technologies, such as those of the Aerospace Industries Association, John Young's Council on Competitiveness, and the Computer Systems Policy Project. It is essential that discussion among government, industry, and academia be fostered and that the planning processes be interconnected at all levels, not just at the top.

At the top of the national critical technologies planning effort, I see the White House Science Office. Last year's Defense Authorization Bill established a National Critical Technologies Panel under Dr. Bromley, with representation from industry, the private sector, and government. They recently held their first meeting, and late this year they will produce the first of six biennial reports scheduled to be released between now and the year 2000. In this year's defense bill, we are proposing to establish a small, federally funded R&D center under the Office of Science and Technology Policy, which would be called the Critical Technologies Institute. The institute will help Dr. Bromley oversee the development of interagency implementation plans under FCCSET for each of the critical technologies identified in the national critical technologies reports (much like the plan on high-performance computing issued last year). Dr. Ed David, when he was White House Science Advisor under President Nixon, suggested to me that the approach adopted by the Federally Funded Research and Development Centers was the only way to ensure stability and continuity in White House oversight of technology policy. After looking at various alternatives, I came to agree with him.

Of course, no structure is a substitute for leadership. I believe that the policy-making and reporting structure that we've put in place will make the job of government and industry leaders easier. It will ensure greater visibility for the issues, greater accountability in establishing and pursuing technology policies, greater opportunity to connect technology policy with the other government policies that affect the success or failure of U.S. industry, and greater coherence among research efforts in government, industry, and academia. That is the goal that we are pursuing.

I think we will find as we follow this path that no single strategy will be appropriate to each technology or to each industry. What worked for high-performance supercomputing will not transfer readily to advanced materials or to biotechnology. We will need to define appropriate roles in each instance in light of the existing government and industry structure in that technology. In each instance, flexibility and pragmatism will need to be the watchwords for our efforts.

My hope is that if another conference like this occurs seven years from now, we will be able to report that there is a coherent technology policy in place and that you in this room are no longer unique in having a White House-blessed implementation plan.

You may not feel you are in such a privileged position at this moment compared to other technologies, and you know better than I the problems that lie ahead in ensuring continued American leadership in strategic computing. I hope this conference will identify the barriers that remain in the way of progress in this field. I fully recognize that many of those barriers lie outside the area of technology policy. A coherent technology strategy on high-performance computing is necessary but clearly not sufficient for us to remain competitive in this area.

I conclude by saying I believe that you, and all others involved in high-performance supercomputing, have come a great distance in the last seven years and have much to be proud of. I hope that as a result of this conference you will set a sound course for the next seven years.

Thank you for the opportunity to meet with you, and I wish you a very productive week.

Goals for Frontiers of Supercomputing II and Review of Events since 1983

Kermith Speierman

At the time of the first Frontiers of Supercomputing conference in 1983, Kermith H. "K." Speierman was the chief scientist at the National Security Agency (NSA), a position he held until 1990. He has been a champion of computing at all levels, especially of supercomputing and parallel processing. He played a major role in the last conference. It was largely through his efforts that NSA developed its parallel processing capabilities and established the Supercomputing Research Center.

I would like to review with you the summary of the last Frontiers of Supercomputing conference in 1983. Then I would like to present a few representative significant achievements in high-performance computing over the past seven years. I have talked with some of you about these achievements and I appreciate your help. Last, I'd like to talk about the goals of this conference and share with you some questions that I think are useful for us to consider during our discussions.

1983 Conference Summary

In August of 1983, at the previous conference, we recognized that there is a compelling need for more and faster supercomputers. The Japanese, in fact, have shown that they have a national goal in supercomputation and can achieve effective cooperation between government, industry, and academia in their country. I think the Japanese shocked us a little in 1983, and we were a bit complacent then. However, I believe we are now guided more by our needs, our capabilities, and the idea of having a consistent, balanced program with other sciences and industry. So I think we've reached a level of maturity that is considerably greater than we had in 1983. I think U.S. vendors are now beginning, as a result of events that have gone on during this period, to be very serious about massively parallel systems, or what we now tend to call scalable parallel systems.

The only evident approach to achieve large increases over current supercomputer speeds is through massively parallel systems. However, there are some interesting ideas in other areas like optics that are exciting. But I think for this next decade we do have to look very hard at the scalable parallel systems.

We don't know how to use parallel architectures very well. The step from a few processors to large numbers is a difficult problem. It is still a challenge, but we now know a great deal more about using parallel processors on real problems. It is still very true that much work is required on algorithms, languages, and software to facilitate the effective use of parallel architectures.

It is also still true that the vendors need a larger market for supercomputers to sustain an accelerated development program. I think that may be a more difficult problem now than it was in 1983 because the cost of developing supercomputers has grown considerably. However, the world market is really not that big; it is approximately a $1 billion-per-year market. In short, the revenue base is still small.

Potential supercomputer applications may be far greater than current usage indicates. In fact, I think that the number of potential applications is enormous and continues to grow.

U.S. computer companies have a serious problem buying fast, bipolar memory chips in the U.S. We have to go out of the country for a lot of that technology. I think our companies have tried to develop U.S. sources more recently, and there has been some success in that. Right now, there is considerable interest in fast bipolar SRAMs. It will be interesting to see if we can meet that need in the U.S.

Packaging is a major part of the design effort. As speed increases, you all know, packaging gets to be a much tougher problem in almost a nonlinear way. That is still a very difficult problem.

Supercomputers are systems consisting of algorithms, languages, software, architecture, peripherals, and devices. They should be developed as systems that recognize the critical interaction of all the parts. You have to deal with a whole system if you're going to build something that's usable.

Collaboration among government, industry, and academia on supercomputer matters is essential to meet U.S. needs. The type of collaboration that we have is important. We need to find collaboration that is right for the U.S. and takes advantage of the institutions and the work patterns that we are most comfortable with. As suggested by Senator Jeff Bingaman in his presentation during this session, the U.S. needs national supercomputer goals and a strategic plan to reach those goals.

Events in Supercomputing since 1983

Now I'd like to talk about representative events that I believe have become significant in supercomputing since 1983. After the 1983 conference, the National Security Agency (NSA) went to the Institute for Defense Analyses (IDA) and said that they would like to establish a division of IDA to do research in parallel processing for NSA. We established the Supercomputing Research Center (SRC), and I think this was an important step.

Meanwhile, NSF established supercomputing centers, which provided increased supercomputer access to researchers across the country. There were other centers established in a number of places. For instance, we have a Parallel Processing Science and Technology Center that was set up by NSF at Rice University with Caltech and Argonne National Laboratory. NSF now has computational science and engineering programs that are extremely important in computational math, engineering, biology, and chemistry, and they really do apply this new paradigm in which we use computational science in a very fundamental way on basic problems in those areas.

Since 1983, scientific visualization has also become a really important element in supercomputing.

The startup of Engineering Technology Associates Systems (ETA) was announced at the 1983 banquet speech by Bill Norris. Unfortunately, ETA disbanded as an organization in 1989.

In 1983, Denelcor was a young organization that was pursuing an interesting parallel processing structure. Denelcor went out of business, but their ideas live on at Tera Computer Company, with Burton Smith behind them.

Cray Research, Inc., has split into three companies since 1983. One of those, Supercomputing Systems, Inc., is receiving significant technological and financial support from IBM, which is a very positive direction.

At this time, the R&D costs for a new supercomputer chasing very fast clock times are $200 or $300 million. I'm told that's about 10 times as much as it was 10 years ago.

Japan is certainly a major producer of supercomputers now, but they haven't run away with the market. We have a federal High Performance Computing Initiative that was published by the Office of Science and Technology Policy in 1989, and it is a result of the excellent interagency cooperation that we have. It is a good plan and has goals that I hope will serve us well.

The Defense Advanced Research Projects Agency's Strategic Computing Program began in 1983. It has continued on and made significant contributions to high-performance computing.

We now have the commercial availability of massively parallel machines. I hope that commercial availability of these machines will soon be a financial success.

I believe the U.S. does have a clear lead in parallel processing, and it's our job to take advantage of that and capitalize on it. There are a significant number of applications that have been parallelized, and as that set of applications grows, we can be very encouraged.

We now have compilers that produce parallel code for a number of different machines and from a number of different languages. The researchers tell me that we have a lot more to do, but there is good progress here. In the research community there are some new, exciting ideas in parallel processing and computational models that should be very important to us.

We do have a much better understanding now of interconnection nets and scaling. If you remember back seven years, the problem of interconnecting all these processors was of great concern to all of us.

There has been a dramatic improvement in microprocessor performance, I think primarily because of RISC architectures and microelectronics for very-large-scale integration. We have high-performance workstations now that are as powerful as CRAY-1s. We have special accelerator boards that perform in these workstations for special functions at very high rates. We have minisupercomputers that are both vector and scalable parallel machines. And UNIX is certainly becoming a standard for high-performance computing.

We are still "living on silicon." As a result, the supercomputers that we are going to see next are going to be very hot. Some of them may be requiring a megawatt of electrical input, which will be a problem.

I think there is a little flickering interest again in superconducting electronics, which provides a promise of much smaller delay-power products, which in turn would help a lot with the heat problem and give us faster switching speeds.

Conference Goals

Underlying our planning for this conference were two primary themes or goals. One was the national reassessment of high-performance computing—that is, how much progress have we made in seven years? The other was to have a better understanding of the limits of high-performance computing. I'd like to preface this portion of the discussion by saying that not all limits are bad. Some limits save our lives. But it is very important to understand limits. By limits, I mean speed of light, switching energy, and so on.

The reassessment process is one, I think, of basically looking at progress and understanding why we had problems, why we did well in some areas, and why we seemed to have more difficulties in others. Systems limits are questions of architectural structures and software. Applications limits are a question of how computer architectures and the organization of the system affect the kinds of algorithms and problems that you can put on those systems. Also, there are financial and business limits, as well as policy limits, that we need to understand.

Questions

Finally, I would like to pose a few questions for us to ponder during this conference. I think we have to address in an analytical way our ability to remain superior in supercomputing. Has our progress been satisfactory? Are we meeting the high-performance computing needs of science, industry, and government? What should be the government's role in high-performance computing?

Do we have a balanced program? Is it consistent? Are there some show-stoppers in it? Is it balanced with other scientific programs that the U.S. has to deal with? Is the program aggressive enough? What benefits will result from this investment in our country?

The Gartner report addresses this last question. What will the benefits be if we implement the federal High Performance Computing Initiative?

Finally, I want to thank all of you for coming to this conference. I know many of you, and I know that you represent the leadership in this business. I hope that we will have a very successful week.

Current Status of Supercomputing in the United States

Erich Bloch

Erich Bloch serves as a Distinguished Fellow at the Council on Competitiveness. Previously, he was the Director of the National Science Foundation. Early in his career, in the 1960s, Erich worked with the National Security Agency as the Program Manager of the IBM Stretch project, helping to build the fastest machine that could be built at that time for national security applications. At IBM, Erich was a strong leader in high-performance computing and was one of the key people who started the Semiconductor Research Cooperative.

Erich is chairman of the new Physical Sciences, Math, and Engineering Committee (an organ of the Federal Coordinating Committee on Science, Engineering, and Technology), which has responsibility for high-performance computing. He is also a member of the National Advisory Committee on Semiconductors and has received the National Medal of Technology from the President.

I appreciate this opportunity to talk about supercomputing and computers and technology. This is a topic of special interest to you, the National Science Foundation, and the nation.

But it is also a topic of personal interest to me. In fact, the Los Alamos Scientific Laboratory has special meaning for me. It was my second home during the late fifties and early sixties, when I was manager of IBM's Stretch Design and Engineering group.

How the world has changed! We had two-megabit—not megabyte—core memories, two circuit/plug-in units with a cycle time of 200 nanoseconds. Also, in pipelining, we had the first "interrupt mechanisms" and "look-ahead mechanisms."

But some things have stayed the same: cost overruns, not meeting specs, disappointing performance, missed schedules! It seems that these are universal rules of supercomputing.

But enough of this. What I want to do is talk about the new global environment, changes brought about by big computers and computer science, institutional competition, federal science and technology, and policy issues.

The Global Imperative

Never before have scientific knowledge and technology been so clearly coupled with economic prosperity and an improved standard of living. Where access to natural resources was once a major source of economic success, today access to technology—which means access to knowledge—is probably more important. Industries based primarily on knowledge and fast-moving technologies—such as semiconductors, biotechnology, and information technologies—are becoming the new basic industries fueling economic growth.

Advances in information technologies and computers have revolutionized the transfer of information, rendering once impervious national borders open to critical new knowledge. As the pace of new discoveries and new knowledge picks up, the speed at which knowledge can be accessed becomes a decisive factor in the commercial success of technologies.

Increasing global economic integration has become an undeniable fact. Even large nations must now look outward and deal with a world economy. Modern corporations operate internationally to an extent that was undreamed of 40 years ago. That's because it would have been impossible to operate the multinational corporations of today without modern information, communications, and transportation technologies.

Moreover, many countries that were not previously serious players in the world economy are now competitors. Global economic integration has been accompanied by a rapid diffusion of technological capability in the form of technically educated people. The United States, in a dominant position in nearly all technologies at the end of World War II, is now only one producer among many. High-quality products now come from countries that a decade or two ago traded mainly in agricultural products or raw materials.

Our technical and scientific strength will be challenged much more directly than in the past. Our institutions must learn to function in this environment. This will not be easy.

Importance of Computers—The Knowledge Economy

Amid all this change, computing has become a symbol for our creativity and productivity and a barometer in the effort to maintain our competitive position in the world arena. The development of the computer, and its spread through industry, government, and education, has brought forth the emergence of knowledge as the critical new commodity in today's global economy. In fact, computers and computer science have become the principal enabling technology of the knowledge economy.

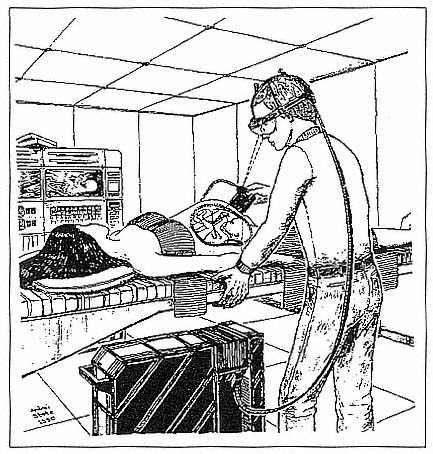

Supercomputers, in particular, are increasingly important to design and manufacturing processes in diverse industries: oil exploration, aeronautics and aerospace, pharmaceuticals, energy, transportation, automobiles, and electronics, just to name the most obvious examples. They have become an essential instrument in the performance of research, a new tool to be used alongside modeling, experimentation, and theory, that pushes the frontiers of knowledge, generates new ideas, and creates new fields. They are also making it possible to take up old problems—like complex-systems theory, approaches to nonlinear systems, genome mapping, and three-dimensional modeling of full aircraft configurations—that were impractical to pursue in the past.

We are only at the beginning of a general exploitation of supercomputers that will profoundly affect academia, industry, and the service sector. During the first 30 years of their existence, computers fostered computer science and engineering and computer architecture. More recently, we have seen the development of computational science and engineering as a means of performing sophisticated research and design tasks. Supercomputer technology and network and graphics technology, coupled with mathematical methods for algorithms, are the basis for this development.

Also, we have used the von Neumann architecture for a long time. Only recently has a new approach, massive parallelism, begun to develop. The practical importance of supercomputers will continue to increase as their technological capabilities advance, their user access improves, and their use becomes simpler.

Computers—A Historic Perspective

Let's follow the development of computing for a moment. The computer industry is an American success story—the product of our ingenuity and of a period of unquestioned market and technological leadership in the first three and a half decades after World War II.

What did we do right?

First, we had help from historical events. World War II generated research needs and a cooperative relationship among government, academia, and the fledgling computer industry. Government support of computer research was driven by the Korean War and the Cold War. Federal funding was plentiful, and it went to commercially oriented firms capable of exploiting the technology for broader markets.

But we had other things going for us as well. There were important parallel developments and cross-feeding between electronics, materials, and electromechanics. There was a human talent base developed during the war. There was job mobility, as people moved from government labs to industry and universities, taking knowledge of the new technologies with them.

There was also a supportive business climate. U.S. companies that entered the field—IBM, Sperry Corporation, National Cash Register, Burroughs—were able to make large capital investments. And there was an entrepreneurial infrastructure eager to exploit new ideas.

Manufacturing and early automation attempts had a revolutionary impact on the progress of computer development. It's not fully appreciated that the mass production of 650s, 1401s, and later, 7090s and 360s set the cost/performance curve of computers on its precipitous decline and assured technology preeminence.

Industry leaders were willing to take risks and play a hunch. Marketing forecasts did not justify automation; IBM proceeded on faith and demonstrated that the forecasts were consistently on the low side. A typical assessment of the time was that "14 supercomputers can satisfy the world demand."

We had another thing going for us—our university research enterprise. Coupling research and education in the universities encouraged human talent at the forefront of the computer field and created computer departments at the cutting edge of design and construction: Illinois, MIT, IAS, and the University of Pennsylvania.

Clearly, it was the right mix of elements. But there was nothing inevitable about our successful domination of the field for the last 30 years. That was partly attributable to the failures of our competitors.

England provides a good case study of what can go wrong. It had the same basic elements we had:

• the right people (Turing, Kilburn);

• good universities (Manchester, Cambridge, Edinburgh); and

• some good companies (Ferranti, Lyons).

So why did it not compete with us in this vital industry? One reason, again, is history. World War II had a much more destructive effect on Britain than on us. But there were more profound reasons. The British government was not aggressive in supporting this new development. As Kenneth Flamm points out, the British defense establishment was less willing than its American counterpart to support speculative and risky high-tech ventures.

The British government did not assume a central role in supporting university research. British industry was also more conservative and the business climate less favorable. The home market was too small; industry was unable to produce and market a rapidly changing technology, and it did not recognize the need to focus on manufacturability. Finally, there was less mobility of talented people between government, industry, and universities. In fact, there was more of a barrier to educating enough people in a new technological world than in the U.S.

Why bring up this old history? Because international competition in computing is greater, and the stakes higher, than ever before. And it is not clear that we are prepared to meet this competition or that our unique advantages of the 1950s exist today:

• Government policy toward high-risk, high-technology industries is less clear than in the 1950s. The old rationale for close cooperation—national defense—is no longer as compelling. Neither is defense the same leading user of high technology it once was.

• The advantage of our large domestic market is now rivaled by the European Economic Community (EEC) and the Pacific Rim countries.

• Both Japan and the EEC are mounting major programs to enhance their technology base, while our technology base is shrinking.

• Japan, as a matter of national policy, is enhancing cooperation between industry and universities—not always their own universities but sometimes ours.

• Industry is less able and willing to take the risk that IBM and Sperry did in the 1950s. The trend today is toward manipulating the financial structure for short-term profits.

• Finally, although the stakes and possible gains are tremendous, the costs of developing new generations of technology have risen beyond the ability of all but the largest and strongest companies, and sometimes of entire industries, to handle.

Corrective Action

What should we do so that we do not repeat the error of Great Britain in the 1950s? Both the changing global environment and increasing foreign competition should focus our attention on four actions to ensure that our economic performance can meet the competition.

First, we must make people, including well-educated scientists and engineers and a technically literate work force and populace, the focus of national policy. Nothing is more important than developing and using our human resources effectively.

Second, we must invest adequately in research and development.

Third, we must learn to cooperate in developing precompetitive technology in cases where costs may be prohibitive or skills lacking for individual companies or even an industry.

Fourth, we must have access to new knowledge, both at home and abroad.

Let me discuss each of these four points.

Human Resources

People are the crucial resource. People generate the knowledge that allows us to create new technologies. We need more scientists and engineers, but we are not producing them.

In the last decade, employment of scientists and engineers grew three times as fast as total employment and twice as fast as total professional employment. Most of this growth was in the service sector, in which employment of scientists and engineers rose 5.7 per cent per year for the last decade. But even in the manufacturing sector, where there was no growth at all in total employment, science and engineering employment rose four per cent per year, attesting to the increasing technical complexity of manufacturing.

So there is no doubt about the demand for scientists and engineers. But there is real doubt that the supply will keep up. The student population is shrinking, so we must attract a larger proportion of students into science and engineering fields just to maintain the current number of graduates.

Unfortunately, the trend is the other way. Freshman interest in engineering and computer sciences decreased during the 1980s, but it increased for business, humanities, and the social sciences. Baccalaureates in mathematics and computer science peaked in 1986 and have since declined over 17 per cent. Among the physical and biological sciences, interest has grown only marginally.

In addition, minorities and women are increasingly important to our future work force. So we must make sure these groups participate to their fullest in science and engineering. But today only 14 per cent of female students, compared to 25 per cent of male students, are interested in the natural sciences and engineering in high school. By the time these students receive their bachelor's degrees, the number of women in these fields is less than half that of men. Only a tiny fraction of women go on to obtain Ph.D.s.

The problem is even worse among Blacks, Native Americans, and Hispanics at every level—and these groups are a growing part of our population. Look around the room and you can see what I mean.

To deal with our human-resources problem, NSF has made human resources a priority, with special emphasis on programs to attract more women and minorities. At the precollege level, our budget has doubled since 1984, with many programs to improve math and science teachers and teaching. At the undergraduate level, NSF is developing new curricula in engineering, mathematics, biology, chemistry, physics, computer sciences, and foreign languages. And we are expanding our Research for Undergraduates Program.

My question to you is, how good are our education courses in computer science and engineering? How relevant are they to the requirements of future employers? Do they reflect the needs of other disciplines for new computational approaches?

R&D Investment

In the U.S., academic research is the source of most of the new ideas that drive innovation. Entire industries, including semiconductors, biotechnology, computers, and many materials areas, are based on research begun in universities.

The principal supporter of academic research is the federal government. Over the last 20 years, however, we have allowed academic research to languish. As a per cent of gross national product, federal support for academic research declined sharply from 1968 to 1974 and has not yet recovered to the 1968 level. Furthermore, most of the recent growth has occurred in the life sciences. Federal investment in the physical sciences and engineering, the fields that are most critical for competitive technologies, has stagnated. As a partial solution to this problem, NSF and the Administration have pressed for a doubling of the NSF budget by 1993. This would make a substantial difference and is essential to our technological and economic competitiveness.

We must also consider the balance between civilian and defense R&D. Today, in contrast to the past, the commercial sector is the precursor of leading-edge technologies, whereas defense research has become less critical to spawning commercial technology.

But this shift is not reflected in federal funding priorities. During the 1980s, the U.S. government sharply increased its investment in defense R&D as part of the arms buildup. Ten years ago, the federal R&D investment was evenly distributed between the defense and civilian sectors. Today the defense sector absorbs about 60 per cent. In 1987 it was as high as 67 or 68 per cent.

In addition to the federal R&D picture, we must consider the R&D investments made by industry, which has the prime responsibility for technology commercialization. Industry cannot succeed without strong R&D investments, and recently industry's investment in R&D has declined in real terms. It's a moot point whether the reason was the leveraged buyout and merger binge or shortsighted management action or something else. The important thing is to recognize the problem and begin to turn it around.

Industry must take advantage of university research, which in the U.S. is the wellspring of new concepts and ideas. NSF's science and technology centers, engineering research centers, and supercomputer centers are designed with this in mind, namely, multidisciplinary, relevant research with participation by the nonacademic sector.

But on a broader scale, the High Performance Computing Initiative developed under the direction of the Office of Science and Technology Policy requires not only the participation of all concerned agencies and industry but everybody's participation, especially that of the individuals and organizations here today.

Technology Strategy

Since World War II the federal government has accepted its role as basic research supporter. But it cannot be concerned with basic research only. The shift to a world economy and the development of technology has meant that in many areas the scale of technology development has grown to the point where, at least in some cases, industry can no longer support it alone.

The United States, however, has been ambivalent about the government role in furthering the generic technology base, except in areas such as defense, in which government is the main customer. In contrast, our foreign competitors often have the advantage of government support, which reduces the risk and assures a long-term financial commitment.

Nobody questions the government's role of ensuring that economic conditions are suitable for commercializing technologies. Fiscal and monetary policies, trade policies, R&D tax and antitrust laws, and interest rates are all tools through which the government creates the financial and regulatory environment within which industry can compete. But this is not enough. In addition, government and industry, together, must cooperate in the proper development of generic precompetitive technology in areas where it is clear that individual companies or private consortia are not able to do the job.

In many areas, the boundary lines between basic research and technology are blurring, if not overlapping completely. In these areas, generic technologies at their formative stages are the base for entire industries and industrial sectors. But the gestation period is long; it requires the interplay with basic science in a back-and-forth fashion. Developing generic technologies is expensive and risky, and the knowledge diffuses quickly to competitors.

If, at one time, the development of generic technology was a matter for the private sector, why does it now need the support of government?

First, the public sector was in fact involved in the past. For nearly 40 years, generic technology was developed by the U.S. in the context of military and space programs supported by the Department of Defense and the National Aeronautics and Space Administration. But recent developments have undermined this strategy for supporting generic technology:

• As I already said, the strategic technologies of the future will be developed increasingly in civilian contexts rather than in military or space programs. This is the reverse of the situation that existed in the sixties and seventies.

• American industry is facing competitors that are supported by their governments in establishing public/private partnerships for the development of generic technologies, both in the Pacific Rim and in the EEC.

• What's more, the cost of developing new technologies is rising. In many key industries, U.S. companies are losing their market share to foreign competitors—not only abroad but at home, as well. They are constrained in their ability to invest in new, risky technology efforts. They need additional resources.

But let's be clear . . .

The "technology strategy" that I'm talking about is not an "industrial policy." Cooperation between government and industry does not mean a centrally controlled, government-coordinated plan for industrial development. It is absolutely fundamental that the basic choices concerning which products to develop and when must remain with private industry, backed by private money and the discipline of the market. But we can have this and also have the government assume a role that no longer can be satisfied by the private sector.

Cooperation is also needed between industry and universities in order to get new knowledge moving smoothly from the laboratory to the market. Before World War II, universities looked to industry for research support. During and after the war, however, it became easier for universities to get what they needed from the government, and the tradition slowly grew that industry and universities should stay at arm's length. But this was acceptable only when government was willing to carry the whole load, and that is no longer true. Today, neither side can afford to remain detached.

Better relations between industry and universities yield benefits to both sectors. Universities get needed financial support and a better vantage point for understanding industry's needs. Industry gets access to the best new ideas and the brightest people and a steady supply of the well-trained scientists and engineers it needs.

Cooperation also means private firms must learn to work together. In the U.S., at least in this century, antitrust laws have forced companies to consider their competitors as adversaries. This worked well to ensure competition in the domestic market, but it works less well today, when the real competition is not domestic, but foreign. Our laws and public attitudes must adjust to this new reality. We must understand both that cooperation at the precompetitive level is not a barrier to fierce competition in the marketplace and that domestic cooperation may be the prerequisite for international competitive success.

The evolution of the Semiconductor Manufacturing Technology Consortium is a good example of how government support and cooperation with industry lead to productive outcomes.

International Cooperation

Paradoxically, we must also strengthen international cooperation in research even as we learn to compete more aggressively. There is no confining knowledge within national or political boundaries, and no nation can afford to rely on its own resources for generating new knowledge. Free access to new knowledge in other countries is necessary to remain competitive, but it depends on cooperative relationships.

In addition, the cost and complexity of modern research have escalated to the point where no nation can do it all, especially in "big science" areas and in fields like AIDS, global warming, earthquake prediction, and nuclear waste management. In these and other fields, sharing of people and facilities should be the automatic approach of research administrators.

Summary

My focus has been on the new global environment; the changes brought about by computers and computer science; international competition, its promise and its danger; and the role of government. But more important is a sustained commitment to cooperation and to a technical work force—these are the major determinants of success in developing a vibrant economy.

In the postwar years, we built up our basic science and engineering research structure and achieved a commanding lead in basic research and most strategic technologies. But now the focus must shift to holding on to what we accomplished and to building a new national technology structure that will allow us to achieve and maintain a commanding lead in the technologies that determine economic success in the world marketplace.

During World War II, the freedom of the world was at stake. During the Cold War, our free society was at stake. Today it is our standard of living and our leadership of the world as an economic power that are at stake.

Let me leave you with one thought: computers have become a symbol of our age. They are also a symbol and a barometer of the country's creativity and productivity in the effort to maintain our competitive position in the world arena. As other countries succeed in this area or overtake us, computers can become a symbol of our vulnerability.

2—

TECHNOLOGY PERSPECTIVE

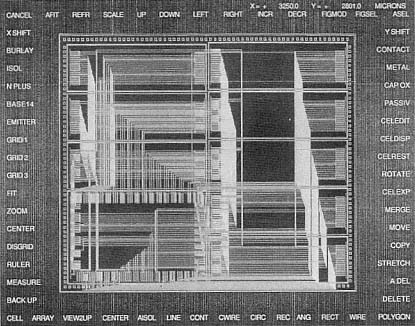

This session focused on technology for supercomputing—its current state, projections, limitations, and foreign dependencies. The viability of the U.S. semiconductor industry as a source of parts was considered. The possible roles of gallium arsenide, silicon, superconductive, and electro-optical technologies in supercomputers were discussed. Packaging, cooling, computer-aided design, and circuit simulation were also discussed.

Session Chair

Robert Cooper,

Atlantic Aerospace Electronics Corporation

Overview

Robert Cooper

Robert Cooper is currently the President, CEO, and Chairman of the Board of Atlantic Aerospace Electronics Corporation. Previously, he served simultaneously as Assistant Secretary of Defense for Research and Technology and Director of the Defense Advanced Research Projects Agency (DARPA). Under his directorship, DARPA moved into areas such as programs in advanced aeronautical systems, gallium arsenide microelectronic circuits, new-generation computing technology, and artificial intelligence concepts. Bob has also been the Director of the NASA Goddard Space Flight Center and the Assistant Director of Defense Research at MIT's Lincoln Laboratory. Bob holds a doctorate from MIT in electrical engineering and mathematics.

When I was at Goddard, we started the first massively parallel processor that was built, and it subsequently functioned at Goddard for many, many years. Interestingly enough, as I walked into this room to be on this panel, one of the folks who was on that program sat down next to me and said that he remembered those days fondly.

I'm really quite impressed by this group, and I subscribe to the comment that I heard out in the hallway just before the first session. One person was talking to another and said that he had never seen such a high concentration of computing genius in one place since 1954 at the Courant Institute, when John von Neumann dined alone. Be that as it may, I am nevertheless confident that if anything can be made to happen in the high-end computer industry in this country, this group can play a key role in making it happen.

That comment also goes for the panel today, which is going to attack the problems of technology and perspectives for the future. We actually are starting this conference from a technical perspective by looking at the future—considering the prospects for computation—rather than looking toward the past, as we did in the first session.

Before we get started with our first speaker, I'd like to say a couple of words about what I see happening to the technology of high-end computing in the U.S. and in the world. Basically, the enabling technologies for high-end computing are the devices themselves. The physical constraints are the things that you will hear a lot about in this session: the logic devices; the memory devices; the architectural concepts, to a certain extent, which are determined by how you can fit these things together; and the interconnect technologies.

The main issue with technology developments in this area in this country is that we are somehow unable to take advantage of all of these things at the scale required to put large-scale systems together. That is one of the reasons why we started the Strategic Computing Initiative back in 1983 at the Defense Advanced Research Projects Agency (DARPA). It is also why I think we are all hanging so much hope on the High Performance Computing Initiative that has come out of the study activity at DARPA and at the Office of Science and Technology Policy since about 1989.

I think it is the technology transition problem that we have to face. There is a role for government and a role for industry in the transition. I have been associated with some companies recently who have tried to take technology that they developed or that was somewhat common in the industry and make products out of it. I think that before we finish this particular session, we should talk about the issue of technology transition.

Supercomputing Tools and Technology

Tony Vacca

Tony Vacca is the Vice President of Technology at Cray Research, Inc., and has responsibility for product and technology development beyond Cray's C90 vector processor. Tony has had over 20 years' experience with circuit design, packaging, and storage. He began his career working at Raytheon Company as a design engineer, thereafter joining Control Data Corporation. From 1981 to 1989, he was the leader of the technology group at Engineering Technology Associates Systems. Tony has a bachelor of science degree in electrical engineering from the Michigan Technological Institute and has done graduate work at Northeastern and Stanford Universities.

The supercomputer technologies, or more generally, high-performance computer technologies, cover a broad spectrum of requirements that have to be looked at simultaneously at any given time to meet the goals, which are usually schedule-driven.

From a semiconductor perspective, the technologies fall into four classes: silicon, gallium arsenide, the superconductor, and the optical. In parallel, we have to look simultaneously at such things as computer-aided design tools, under which is a category of elements that get increasingly important as microminiaturization and scaling of integration rise.

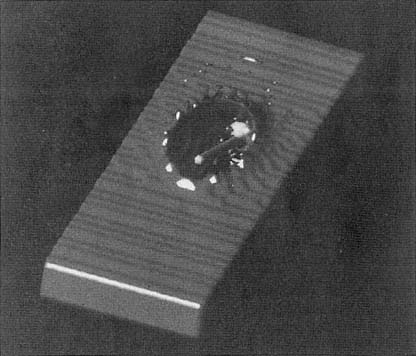

Also, we have to look at the packaging issues, and there are a lot of computer-aided design tools that are helping us in that area. As was discussed earlier, the issue of thermal management at all levels is very crucial, but the need for performance still dominates; we have to keep that in perspective.

Silicon is a very resilient technology, and every time it gets challenged it appears to respond. There are a lot of challenges to silicon, but I don't see many candidates in the near future that are more promising in the area of storage, especially dynamic storage, and possibly in some forms of logic.

Gallium arsenide has struggled over the last 10 years and is finally coming out as a "real" technology. Gallium arsenide has sent some false messages in some forms because some of the technology has focused not on performance but on power consumption. When it focuses on both, it will be much more effective for us. Usually when we are applying these technologies, we have to focus on the power and the speed simultaneously, especially because we are putting more processors on the floor.

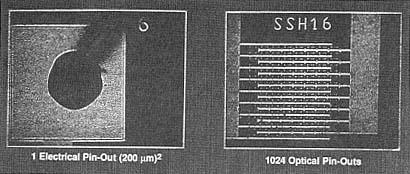

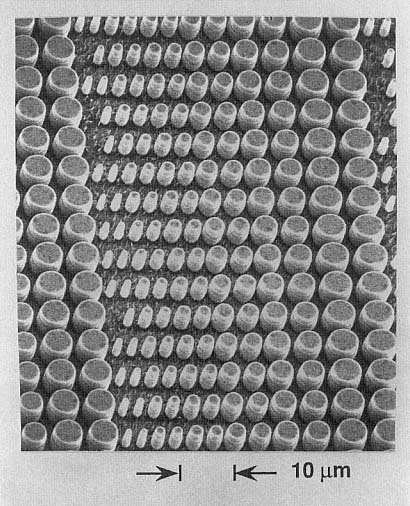

The optical technology, from our viewpoint, has been used a lot in the communications between various media. When people talk about multigigahertz operations, I have some difficulty because I'm fighting to get 500-megahertz, single-bit optics in production from U.S. manufacturers. When people talk about the ability of 20-, 50-, 100-, and 500-gigabit-per-second channels, I believe that is possible in some form, but I don't know how producible the concept is.