2

Preconditions for Interaction

When my grandfather George had a stroke he was led into the house and put to bed, and the Red Men sent lodge brothers to sit with him to exercise the curative power of brotherhood…. Ida Rebecca called upon modern technology to help George. From a mail-order house she ordered a battery-operated galvanic device which applied the stimulation of low-voltage electrical current to his paralysed limbs…. In Morrisonville death was a common part of life. It came for the young as relentlessly as it came for the old. To die antiseptically in a hospital was almost unknown. In Morrisonville death still made house calls.

Russell Baker, Growing Up

Journalist Russell Baker's description of his grandfather's death is typical of American health care in the 1920s.[1]

Russell Baker, Growing Up (New York: New American Library, 1982), 36-38.

The technological changes that characterized agricultural and industrial production had not yet come to medicine.[2]

Stephen Toulmin, "Technological Progress and Social Policy: The Broader Significance of Medical Mishaps," in Mark Siegler et al., eds., Medical Innovation and Bad Outcomes: Legal, Social, and Ethical Responses (Ann Arbor: Health Administration Press, 1987), 22.

Physicians had limited knowledge, and commonly prescribed therapies were improved diets, more exercise, and cleaner environments, not the highly technological interventions customary today.[3]

Selma J. Mushkin, Lynn C. Paringer, and Milton M. Chen, "Returns to Biomedical Research, 1900-1975: An Initial Assessment of Impacts on Health Expenditures," in Richard H. Egdahl and Paul M. Gertman, eds., Technology and the Quality of Health Care (Germantown, Md.: Aspen Systems, 1978).

Doctors held out little hope for treatment of most illnesses; consumers like Baker's grandmother often turned to folk remedies or miracle cures. Hospitals were shunned as places where destitute people without family went to die.[4]

Charles E. Rosenberg, Caring for Strangers: The Rise of America's Hospital System (New York: Basic Books, 1987). This is a comprehensive study of American hospitals from 1800 to 1920.

Government played a negligible role in health care, and any costs of treatment would have been borne by the Baker family, or the treatment forgone if money were not available.

During the first four decades of the twentieth century, innovation in health sciences did occur. Important breakthroughs included improved aseptic surgery techniques, sulfa drugs, and vaccines to treat, and prevent the spread of, infectious diseases. The nascent medical device industry assisted in the advancement of medical science. There were increasingly sophisticated scopes for observing internal bodily functions and advances in laboratory equipment that permitted precise measurement of biochemical phenomena. However, quack devices proliferated alongside legitimate innovations. Many fraudulent devices promising miracle cures capitalized on new discoveries in the fields of electricity and magnetism. The galvanic device purchased to treat Baker's grandfather was typical of popular quack products of the time. Unfortunately for the industry as a whole, these quack devices contributed to the public perception that medical devices were marginal to advanced medical care.

This chapter illustrates how individuals and firms in the private sector overcame structural and scientific barriers to innovation. As a result, the medical device sector enjoyed modest and steady growth.

What is most interesting to the modern reader is the limited role that government played in medical technology innovation. However, seeds of the subsequent multiple roles of government were sown in this early period. By the eve of World War II, several public institutions had been created that would later significantly affect device development. The National Institutes of Health (NIH) would promote medical discovery; the Food and Drug Administration (FDA) would inhibit it. Government involvement in medical device distribution, however, did not occur until somewhat later. World War II accelerated the process of device innovation because of government involvement in the war effort; it both created a demand for medical innovations and overcame the public's reluctance to accept a government role in health policy.

The foundation for subsequent business-government interaction in medical device innovation had been laid. To use our analogy, the preconditions for later prescriptions to treat our patient were set; full-blown medical intervention awaited the 1950s.

Taking the Patient's History: Industry Overview, 1900–1940

Barriers to Device Innovation in the Private Sector

Throughout the eighteenth and nineteenth centuries there was no continuous scientific tradition in America. Medical science depended upon foreign discoveries, which were dominated by Britain in the early nineteenth century, France at midcentury, and Germany in the last half of the century. The lack of research has been attributed to the dearth of certain conditions and facilities essential to medical studies.[5]

Richard H. Shryock, American Medical Research, Past and Present (New York: The Commonwealth Fund, 1947).

The absence of dynamic basic science obviously limited the possibility of technological breakthroughs. However, the early part of the twentieth century witnessed a rise in private sector commitment to basic medical science. Philanthropists, intrigued by possibilities of improvements in health sciences, began to endow private research facilities. The first was the Rockefeller Institute, founded in New York City in 1901. A number of other philanthropic foundations were established in the Rockefeller Institute's wake, including the Hooper Institute for Medical Research, the Phipps Institute in Philadelphia, and the Cushing Institute in Cleveland. This period has been called the "Era of Private Support."[6]

Ibid., 99.

These efforts improved the scientific research base in America, but it remained weak. Compounding this weakness were barriers between basic science and medical practice. During the nineteenth century, practicing physicians had little concern for, or interest in, medical research. Most doctors practiced traditional medicine, relying on their small arsenal of tried-and-true remedies. However, in the first decades of the twentieth century, medicine began to change profoundly, and organizational changes made the medical profession more scientific and rigorous.[7]

Paul Starr, The Social Transformation of American Medicine (New York: Basic Books, 1982), 79-145. This comprehensive study of American medical practice is a classic.

University medical schools began to grow as the need to integrate medical studies with the basic sciences was acknowledged. Support for medical schools tied research to practice and rewarded doctors for research-based medical education. By the end of this period, then, many of the problems associated with the lack of basic medical research had begun to be addressed. By modern standards, however, the commitment to research was extremely small.

As our discussion of innovation revealed, basic scientific research must be linked to invention and development. In other words, there must be mechanisms by which technology is transferred from one stage in the innovation continuum to another. In the nineteenth century, there were serious gaps between basic medical science and applied engineering, which is an essential part of medical device development. American engineering education rarely involved research.[8]

Leonard S. Reich, The Making of American Industrial Research: Science and Business at GE and Bell, 1876-1926 (Cambridge: Cambridge University Press, 1985), 24.

Engineers were trained in technical schools but had few university contacts thereafter. Furthermore, manufacturers had little patience for the ivory towers of university science; they looked instead for profits in the marketplace. Engineering practitioners emphasized applying knowledge to the design of technical systems. Thus, engineers in private companies were cautious about developing advanced technology, preferring to make gradual moves in known directions.[9]

Ibid., 240.

Producers were slow to take advantage of any advances in research in the United States or abroad. Established industries tended to ignore science and to depend on empirical inventions for new developments.[10]

John P. Swann, American Scientists and the Pharmaceutical Industry: Cooperative Research in Twentieth-Century America (Baltimore: Johns Hopkins University Press, 1988).

Patents for medical products did accelerate at the turn of the century, but a majority of them represented engineering shortcuts, not true innovations.[11]

Shryock, American Medical Research, 145.

A few very large corporations addressed this problem by creating in-house research laboratories that combined basic science and applied engineering. These institutions focused primarily on incremental product development, but innovative technologies did appear. Thus, while the gap between universities and corporations did not close, some firms became research-oriented through the establishment of independent laboratories. Companies with large laboratories included American Telephone and Telegraph and General Electric (GE). One major medical device, the X-ray tube, emerged from GE's research lab. It will be described at greater length shortly.

Further barriers to product development arose from the uneasy relationship between product manufacturers and both university researchers and medical practitioners. Inventors applied for a rash of patents following the development of aseptic surgery; between 1880 and 1890, the Patent Office granted about 1,200 device patents. The debate that raged over patents on medical products illustrates the tension between health care and profits. Universities generally resisted patenting innovations for several reasons. They valued the free flow of scientific knowledge among scholars. Because basic scientific research is so interconnected, they also faced hard questions about how to allocate the profits from any final product. And they were concerned that a profit orientation within academia would discourage the practice of sharing scientific knowledge for the betterment of all.[12]

The debate about the appropriate role of academic scientists within universities and about private sector profits continues to this day. For a discussion of the current debate and the relevant public policy on these issues, see chapter 3.

Innovations produced by medical inventors raised additional ethical problems. Often the physician-inventors stood by while commercial organizations exploited remedies based on their work. The medical profession had a traditional ethic against profiting from patents, and the debates about the ethics of patenting medical products continued through this period.[13]

Shryock, American Medical Research, 143.

For example, the Jefferson County Medical Society of Kentucky stated that it "condemns as unethical the patenting of drugs or medical appliances for profit whether the patent be held by a physician or be transferred by him to some university or research fund, since the result is the same, namely, the deprivation of the needy sick of the benefits of many new medical discoveries through the acts of medical men."[14]

Ibid., 122.

Dr. Chevalier Jackson, a noted expert in diseases of the throat, developed many instruments to improve diagnostic and surgical techniques. In his 1938 autobiography, he wrote:

When I became interested in esophagoscopy, direct laryngoscopy, and a little later in bronchoscopy, the metal-working shop at home became a busy place. In it were worked out most of the mechanical problems of foreign body endoscopy. Sometimes the finished instrument was made. At other times only the models were made to demonstrate to the instrument maker the problem and the method of solution. This had the advantage that the instrument was ever afterward available to all physicians. No instrument that I devised was ever patented. It galled me in early days, when I devised my first bronchoscope, to find that a similar lamp arrangement had been patented by a mechanic for use on a urethroscope, and the mechanic insisted that the use of such an arrangement on a bronchoscope was covered. Caring nothing for humanity, the patentee threatened a lawsuit unless his patent right was recognized during the few remaining years it had left to run.[15]

Chevalier Jackson, The Life of Chevalier Jackson: An Autobiography (New York: Macmillan, 1938), 197.

Not all physicians and scientists shared these views, but there was clearly a conflict between the perception of medical technology for the public good and the pursuit of profits, one that created a possible barrier to a full exploitation of the marketplace.

The last barrier to innovation in the medical field was the absence of a sizable market for the available products. In 1940, on the eve of World War II, total spending on health care in the United States amounted to $3.987 billion, or $29.62 per person per year. Health care spending accounted for only 4 percent of GNP. Patients paid directly for about 85 percent of costs. A small number of people received public assistance in city or county hospitals; there was virtually no private insurance coverage.[16]

Department of Commerce, Bureau of the Census, Historical Statistics of the United States, Colonial Times to 1970, bicentennial edition, pt. 2, ser. 221, 247 (Washington, D.C.: GPO, 1975).

There was no buffer for individuals and families in hard times, and health care was often sacrificed when family income was limited.

The market for medical technology remained small by modern standards. Relative to population, hospital beds were far fewer than they are today, and doctors' offices had little technological equipment. Fortunately, the small market did not always deter researchers. For example, a 1914 study by engineers at General Electric concluded that new X-ray tubes would be more expensive than earlier ones and that the market would be too small to justify manufacture. Nonetheless, the management insisted that production proceed, noting that "the tube should be exploited in such a way as to confer a public benefit, feeling that it is a device which is useful to humanity and that we cannot afford to take an arbitrary or even perhaps any ordinary commercial position with regard to it."[17]

Reich, American Industrial Research, 89, citing George Wise, The Corporations' Chemist (Unpublished manuscript, 1981), 237.

Because of this altruistic view, General Electric soon dominated the X-ray market and later turned a tidy profit on the enterprise. Obviously, however, most producers and inventors could not afford to ignore "ordinary commercial" considerations.

Bridging the Gaps

Innovation did occur despite the severe limitations in the private sector. Several examples of device innovation illustrate how these limitations were overcome.

Individual Initiative

One way to overcome institutional barriers to technology transfer was for an individual to fulfill several essential roles. Arnold Beckman, the founder of Beckman Instruments, was a scientist, engineer, and entrepreneur and thus had expertise in all the necessary stages of innovation.[18]

Most of the information on Arnold Beckman and the founding of his company appears in Harrison Stephens, Golden Past, Golden Future: The First Fifty Years of Beckman Instruments, Inc. (Claremont, Calif.: Claremont University Center, 1985).

As an assistant professor of chemistry at the California Institute of Technology in the early 1930s, he was called upon to solve technical problems involving chemistry, and he then built business ventures on the results. His first venture was a special ink formula that would not clog the inking mechanism of a postal meter. Because existing companies would not make the formula, Beckman founded the National Inking Appliance Company in 1934.

Beckman soon undertook additional projects with applications for science and medicine. At the request of agricultural interests, he invented a meter to measure the acidity of lemon juice that had been heavily dosed with sulfur dioxide. Using electronic skills he had developed at Bell Labs as a graduate student, Beckman designed the first acidimeter to measure the acidity or alkalinity of any solution containing water. He formed Beckman Instruments in 1935 to produce these acidimeters and found a market among some of his former professors at a meeting of the American Chemical Society.

In recalling the founding of his company, Beckman noted, "We were lucky because we came into the market at just the time that acidity was getting to be recognized as a very important variable to be controlled, whether it be in body chemistry or food production."[19]

Stephens, Golden Past, 14.

In 1939 he quit teaching to run the business full-time. Beckman's substantial contribution to the war effort, and the benefits the firm reaped as a consequence, will be discussed in a subsequent section. His company later became one of the major medical technology firms in America.[20]

Much of the history of Arnold Beckman's contributions is summarized in Carol Moberg, ed., The Beckman Symposium on Biomedical Instrumentation (New York: Rockefeller University, 1986). This volume celebrates the fiftieth anniversary of the founding of Beckman Instruments.

Beckman's career illustrates how a multifaceted individual, with scientific, technical, and marketing skills, could successfully produce scientific instruments despite the significant barriers to innovation in the early twentieth century.

Industrial Research Laboratories

Industrial research laboratories also served as bridges linking science, technology, and the market. Generally set apart from production facilities, these laboratories were staffed by people who were trained in science and advanced engineering and who worked toward an understanding of the science and technology relevant to their corporations.[21]

Reich, American Industrial Research, 3.

By setting up labs, large firms could conduct scientific research internally and profit from the discoveries. These laboratories became possible only after the consolidations and mergers of the late 1890s and early 1900s produced industrial giants with sufficient resources to fund them. For these firms, research was vital, and product development formed a large part of their competitive strategy. They were well aware of scientific developments in Europe, particularly in the fields of electrochemistry, X-rays, and radioactivity, and they knew they had to stay abreast of technological change.[22]

Ibid., 37.

General Electric Company, founded in 1892, had firmly established a research laboratory within the company by 1910. William Coolidge led its research on the X-ray tube. X-rays had been discovered in Germany in the 1890s, and it was widely known that they had medical value because they allowed doctors to observe bone and tissue structure without surgery. Two-element, partially evacuated tubes generated the X-rays. As they operated, the tubes released more gas, changing the internal pressure and making their output erratic. Coolidge substituted tungsten for platinum. Tungsten could be heated to a higher temperature without melting while emitting less gas than platinum, which yielded higher-powered and longer-lived tubes.

By 1913, the laboratory was manufacturing Coolidge's X-ray tubes on a small scale, selling 300 that year and 6,500 in 1914.[23]

Kendall Birr, Pioneering in Industrial Research: The Story of the General Electric Research Laboratory (Washington, D.C.: Public Affairs Press, 1957).

When the government began to place large orders for tubes for portable X-ray units for military hospitals during World War I, GE began to make significant profits. After the war, GE's management decided that the company would become a full-line X-ray supplier. GE bought the Victor X-ray company, switched its production of Coolidge tubes to Victor, and soon held a dominant position in the new and increasingly profitable medical equipment supply business.[24]

The government's role in World War I represents the beginning of a transition to government involvement in medical device innovation. In this case, the government's demand for X-ray equipment as a purchaser significantly benefited the firm.

Problems of Medical Quackery

Along with these exciting breakthroughs in medical technology, a very different side of the industry flourished. The popularity of fraudulent devices, and concern about the consequences of that fraud, tainted public perception of the industry. Many people associated medical devices with quack products.[25]

The term quack dates to the sixteenth century and is an abbreviation for quacksalver. The term refers to a charlatan who brags or "quacks" about the curative or "salving" powers of the product without knowing anything about medical care. From the Washington Post, 8 July 1985, 6.

When medical science offers no hope of treatment or cure, people have traditionally turned to charms and fetishes for help.[26]

Warren E. Schaller and Charles R. Carroll, Health Quackery and the Consumer (Philadelphia: W. B. Saunders, 1976), 228. This volume contains many descriptions of a variety of fraudulent devices. It makes for amusing reading, but deceptive activities left a lasting legacy on the medical device industry.

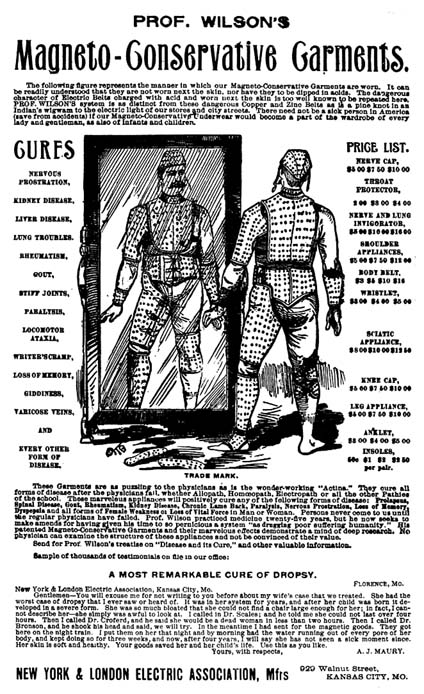

The desperate search for cures was a major factor in the success of all forms of health fraud, including tonics, drugs, potions, and quack devices. Indeed, it is estimated that the public spent $80 million in 1906 on patent medicines of all kinds. The proliferation of quack devices helped to generate subsequent government intervention to protect the public.Innovative developments in scientific fields, such as electromagnetism and electricity, were often applied to lend credence to these health frauds (see figure 5). At the turn of the century, Dr. Hercules Sanché developed his Electropoise machine to aid in the "spontaneous cure of disease."[27]

James H. Young, Medical Messiahs (Princeton: Princeton University Press, 1967), 243.

This device, consisting of a sealed metal cylinder attached to an uninsulated flexible cord that, in turn, attached to the wrist or ankle, supplied, according to the inventor, "the needed amount of electric force to the system and by its thermal action places the body in a condition to absorb oxygen through the lungs and pores."[28]Ibid.

The Oxydonor was a subsequent "improvement" that added a stick of

Figure 5. A quack device.

Source: The Bakken Archives.

carbon to the sealed, and incidentally hollow and empty, cylinder and "cured all forms of disease." The success of the Oxydonor spawned a whole cadre of imitations. There was a death knell for these "pipe and wire" therapies when the inventor of the Oxypathor was convicted of mail fraud. Evidence collected at the trial revealed that the company had sold 45,451 Oxypathors at $35 each in 1914. Considering that as late as 1940 per capita spending on health was only $29.62, the consequences of wasted expenditures seem serious.

Another fraud was based on the new field of radio communications. Dr. Albert Abrams of San Francisco introduced his "Radionics" system, which was based on the pseudomedical theory that electrons are the basic biological unit and all disease stems from a "disharmony of electronic oscillation." Dr. Abrams's diagnoses were made by placing dried blood specimens into the Radioscope. Operated with the patient facing west in a dim light, the device purported not only to diagnose illness but also to tell one's religious preference, sex, and race. At the height of its use, over 3,500 practitioners rented the device from Abrams for $200 down and $5 a month.[29]

Schaller and Carroll, Health Quackery, 226.

One of Abrams's followers was Ruth Drown. She marketed the Drown Radio Therapeutic Instrument, which she claimed could prevent and cure cancer, cirrhosis, heart trouble, back pain, abscesses, and constipation. The device used a drop of blood from a patient, through which Drown claimed she could "tune in" on diseased organs and restore them to health. With two drops she could treat any patient by remote control. A larger version of her instrument was claimed to diagnose as well as to treat disease. Thousands of Californians patronized her establishment.[30]

Drown v. U.S., 198 F.2d 999 (1952). Drown was prosecuted for grand larceny and died while awaiting trial. See Joseph Cramp, ed., Nostrums and Quackery and Pseudo-Medicine, vols. 1-3 (Chicago: Press of the American Medical Association).

Unfortunately for legitimate medical device producers, the prevalence of device quackery tainted the public's perception of the industry. Indeed, initial state and federal efforts to regulate the industry were in response to problems of fraud. Device fraud continues, especially for diseases like arthritis and cancer and for weight control.[31]

Concern about fraudulent drugs and devices surfaces periodically. Congress has held hearings investigating health frauds, particularly frauds against the elderly. See, for example, House Select Committee on Aging, Frauds Against the Elderly: Health Quackery, 96th Cong., 2d sess., no. 96-251 (Washington, D.C.: GPO, 1980). In 1984, the FDA devoted only about one-half of 1 percent of its budget to fighting quack products. Under pressure from the outside, it set up a fraud branch in 1985 to process enforcement actions. See Don Colburn, "Quackery: Medical Fraud Is Proliferating and the FDA Can't Seem to Stop It," Washington Post National Weekly Edition, 8 July 1985, 6. For a description of past and present device quackery, see Stephen Barrett and Gilda Knight, eds., The Health Robbers: How to Protect Your Money and Your Life (Philadelphia: George F. Stickley, 1976).

Fraud in the industry can be seen as a market failure that subsequent government prescriptions sought to correct.

Industry Status in 1940

Despite the successes of some firms and some individuals, the medical device industry remained relatively small as late as 1937. The Census of Manufactures, the best source of information about producers, classified all industrial production by Standard Industrial Classification (SIC) code. Before 1937, the Census contained only one primary industrial category for medical products: SIC 3842, surgical appliances and supplies, which included items such as bandages, surgical gauze and dressings, first aid kits, and surgical and orthopedic appliances. Both the number of producers and the value of their sales in SIC 3842 were quite small until the 1930s. For example, in 1914 there were 391 establishments that shipped products valued at about $16.5 million. The number of producers rose to 445 during World War I, stayed below 300 until the mid-1920s, fell further to 250 during the depression, and reached 323 in 1937. Sales reflected the same ebb and flow, dropping from $71 million in 1929 to $51 million in 1933 and recovering to $77 million by 1937.

In the late 1920s a second classification, SIC 3843, was added for dental products and supplies. In the 1937 data, three more important classifications emerged. SIC 3693 covered X-ray and therapeutic apparatus. Sales of these products had occurred before this time, but they were buried in other, nonmedical industrial categories. Firms in this category primarily manufactured X-ray tubes and ultraviolet lamps. By the 1950s, the code had been renamed X-ray, electromedical, and electrotherapeutic apparatus, reflecting the growing innovation in this field. In 1937, SIC 3693 accounted for $17 million in sales, with forty-six companies producing equipment. A second new code was SIC 3841, surgical and medical instruments, which included products such as surgical knives and blades, hypodermic syringes, and diagnostic apparatus such as ophthalmoscopes; thirty-nine companies were producing devices in this code in 1937. In that year, records also began to be kept for a fifth medical product category, SIC 3851, ophthalmic goods, which included eyeglass frames, lenses, and industrial goggles; seventy-nine companies sold about $43 million worth of these goods in 1937. Across all five relevant SIC codes, there were only 588 establishments, with total product shipments valued at $200 million.[32]

In addition to the extensive data gathered by the Census, see R. D. Peterson and C. R. MacPhee, Economic Organization in Medical Equipment and Supply (Lexington, Mass.: D. C. Heath, 1973).

As we have seen, there was some innovation and modest, though inconsistent, growth in the medical device industry from 1900 to 1940. New products emerged despite barriers to technology transfer at the various stages of the innovation continuum. However, innovation was frequently serendipitous, dependent on individuals and not institutionalized sufficiently to ensure a steady stream of progress. Creative entrepreneurs and industrial laboratories did play a role in overcoming obstacles for some technologies. On the other hand, the device industry was plagued with quackery and fraud.

Evolution of Public Institutions, 1900–1940

With the exception of government procurement during World War I, the device industry grew with minimal government involvement. However, intervention was beginning during this period.

There were perceptible shifts in the public's attitude about the appropriate role of the federal government in economic and social issues. Early institutions and policies emerged that would both promote and inhibit medical innovation. How these policies evolved would influence, to a large extent, the contours of the emerging medical device industry.

Government Promotes Discovery

The general aversion toward government involvement in basic research gave way to acceptance of a public role—a change reflected in the creation of the National Institutes of Health (NIH). At the turn of the century, medical practitioners vehemently rejected any government intervention in their domain. In 1900, a well-known physician appeared before a Senate committee and declared that all the medical profession asked of Congress was no interference with its progress.[33]

Shryock, American Medical Research, 77.

Even as late as 1928, Atherton Seidell, a famous chemist, stated, "We [Americans] naturally question governmental participation in scientific matters because we feel that anything having a political flavor cannot be above suspicion."[34]

Quoted in Harden, Inventing the NIH, 3.

But in the same year, scientist Charles Holmes Herty said, in regard to support of basic science, "I have changed my mind completely, and I feel that the Government should lead in this matter."[35]

Ibid., 92.

Early government activities were primarily in the area of public health, not research. The roots of government involvement lie in the federal Hygienic Laboratory, which in 1902 was charged with implementing the Biologics Control Act, one of the earliest pieces of U.S. health legislation. Under this act, the Hygienic Lab inspected vaccine laboratories, tested the purity of their products, and issued licenses.[36]

Ibid., 28.

In addition, the Public Health Service was established in 1912 to address applied health issues. Neither institution had real involvement in basic sciences until World War I. On the eve of the war, the relationship between science in the private sector and science in government was in a state of equilibrium, with the private sector supporting basic research as well as some applied work and the federal government devoting its limited revenues to practical scientific work through the Hygienic Lab and the Public Health Service.[37]

Ibid., 93.

The war made the government conscious of the value of science, particularly as German superiority in chemical warfare became apparent. After the war, therefore, there was more talk of public and private sector cooperation to promote science. The proposals put forward in this period, however, advocated the control and direction of research under private auspices with very limited government participation. President Coolidge did not favor any marked expansion of government initiatives to promote health; he viewed medicine and science as the provinces of the private sector. In 1928, Coolidge vetoed a bill to create a National Institute of Health that would provide fellowships for research funded by private donations.[38]

Ibid., 127.

He stated, "I do not believe that permanency of appointment of those engaged in the professional and scientific activities of the Government is necessary for progress or accomplishment in those activities or in keeping with public policy."[39]

Ibid., 132.

However, the private sector was not willing to come forward single-handed with substantial funds to support this undertaking. One historian noted that this failure "was to make the concept of government funding less noxious as the years passed and the original hope dimmed."[40]

Ibid., 91.

With the private sector unresponsive, a law establishing the National Institute of Health finally passed in 1930. However, expansion of the NIH into a large-scale facility lay nearly twenty years in the future. The NIH remained small but not inactive. Although Congress refused to appropriate the maximum amount during the 1930s, the NIH did receive increased funds to expand research into chronic diseases. In 1937, Congress authorized the National Cancer Institute (NCI) with legislation that passed in record time and was unanimously supported by the Senate.[41]

For a thorough discussion of the politics of the NIH's role in cancer research, see Richard A. Rettig, A Cancer Crusade: The Story of the National Cancer Act of 1971 (Princeton: Princeton University Press, 1977).

However, most of the NIH's accomplishments up to World War II were responses to public health emergencies. As late as 1935, the president's Science Advisory Board concluded that no comprehensive, centrally controlled research program was desirable except for certain problems related to public health. By 1937, total federal research expenses, spread out among many federal agencies, amounted to only $124 million, and much of that was allocated to natural sciences and nonmedical technology.[42]

Shryock, American Medical Research, 78-79.

Despite limited funding, the NIH represented a significant change in attitude toward the role of government. During this period, the NIH research orientation was set as well. Much of its early work continued to be in the science of public health, and research support could have moved in a more applied direction. However, the NIH preferred to expand into basic research, primarily in conjunction with universities, and avoided support of applied research. In chapter 3, we shall see how the political commitment to the NIH and its research orientation affected medical device innovation.

Government Inhibits Discovery

Government promotion of medical discovery emerged alongside institutions that would later inhibit discovery. This inhibiting role for government arose in response to problems of fraud and quackery.

Fraud in food and medicine sales was nothing new, but during the 1880s and 1890s concern grew about dangerous foodstuffs in the market. Attention focused on the sale of diseased meats and milk and on adulterated food products, such as a combination of inert matter, ground pepper, glucose, hayseed, and flavoring that was sold as raspberry jam. Many nostrums sold to an uninformed and unsuspecting public contained dangerous, habit-forming narcotics.[43]

Oscar E. Anderson, "Pioneer Statute: The Pure Food and Drug Act of 1906," Journal of Public Law 13 (1964): 189-196. See also Oscar E. Anderson, The Health of a Nation: Harvey W. Wiley and the Fight for Pure Food (Chicago: University of Chicago Press, 1958).

States did pass laws to regulate the marketing of harmful products, but these laws were relatively ineffectual because a state could not enforce its regulations against an out-of-state manufacturer. The only recourse was to reach producers indirectly through the local retailers who handled the goods. At the federal level, Congress was not inactive; from 1879, when the House introduced the first bill designed to prevent the adulteration of food, to June 30, 1906, when the Pure Food Law was signed, 190 measures relating to problems with specific foods had been introduced.[44]

Thomas A. Bailey, "Congressional Opposition to the Pure Food Legislation, 1879-1906," American Journal of Sociology 36 (July 1930): 52-64.

A number of important interests marshalled considerable opposition to federal intervention. States' rights Democrats from the South believed that the federal government did not have the constitutional authority to intervene in the private sector.[45]

Ibid.

Some food producers and retailers contended that they could not sell their goods if they had to label all the ingredients. The drug industry joined the fray when a Senate bill extended the definition of drugs to include not only medicines recognized by the United States Pharmacopeia[46] but also any substance intended for the cure, mitigation, or treatment of disease. This definition would bring the proprietary medicines within the scope of the law.[47]

Since 1820, the United States Pharmacopeia Convention (USPC) has set standards for medications used by the American public. It is an independent, nonprofit corporation composed of delegates from colleges of medicine and pharmacy, state medical associations, and other national associations concerned with medicine. When Congress passed the first major drug safety law in 1906, the standards recognized in the statute were those of the USPC. Its major publication, the United States Pharmacopeia (USP), is the world's oldest regularly revised national compendium. Today it continues to be the official compendium of standards for drugs.

Proprietary drugs are those drugs sold directly to the public, and they include patent medicines. The term proprietary indicates that the ingredients are secret, not that they are patented.

The Proprietary Association of America, with trade organizations of wholesale and retail druggists, immediately joined the opposition.

Commercial interests could not suppress public opinion, however, once disclosures of adulterated foods in the muckraking press stirred up progressive fervor. The 1905 publication of Upton Sinclair's The Jungle, which contained graphic images of adulterated food, including a contention that some lard was made out of the bodies of workmen who had fallen into cooking vats, provoked reform. Harvey W. Wiley, chief of the Division of Chemistry in the Department of Agriculture, became a missionary for reform. He organized the "poison squad" in 1902 and found that volunteers who restricted their food intake to diets containing a variety of food additives, such as boric acid, benzoate of soda, and formaldehyde, suffered metabolic, digestive, and other health problems.[48]

Temin, Taking Your Medicine, 28.

The 1906 Pure Food Act was a compromise among many diverse and competing interests. The act was very different in orientation from the subsequent drug regulation; it was intended to aid consumers in the marketplace, not to restrict access of products to the market. The act, in short, made misrepresentation illegal. A drug was deemed adulterated if it deviated from the standards of the national formularies without so admitting on the label. A drug was considered misbranded if the company sold it under a false name or in the package of another drug or if it failed to identify the presence of designated addictive substances. The authority of the federal government was limited to seizure of the adulterated or misbranded articles and prosecution of the manufacturer.[49]

Pure Food Act, 34 Stat. 674 (1906).

It is important to remember that this was not an effort by the federal government to intervene in medical care decisions. Because there were few effective drugs in 1906 and most were purchased by consumers without the aid of physicians, drug regulation was seen as a part of food regulation, not of health care per se. Inclusion of medical devices—products used for health care but not consumed as food or drugs—does not appear to have been considered during the decades in which Congress debated food and drug legislation.

Nevertheless, the 1906 law initiated the subsequent growth of a federal role in consumer protection. The law was administered by the Department of Agriculture's Bureau of Chemistry, as the Division of Chemistry had been renamed in 1901. The bureau's appropriation rose by a factor of five, and its staff grew from 110 employees in 1906 to 425 in 1908.[50]

A. Hunter Dupree, Science in the Federal Government: A History of Policies and Activities to 1940 (Cambridge: Harvard University Press, 1957), 179.

The Agriculture Appropriations Act of 1931 established the Food and Drug Administration (FDA) within the department.[51]

Bruce C. Davidson, "Preventive 'Medicine' for Medical Devices: Further Regulation Required?" Marquette Law Review 55 (Fall 1972): 408-455.

It had a budget of $1.6 million and over 500 employees at the time of its name change.

Although medical devices were overlooked from 1906 to 1931, several important steps had been taken. First, the federal government's role in protecting the public from adulterated and misbranded products had been accepted. Second, a federal institution with expertise in regulation, the FDA, was in place. As problems subsequently arose in other product areas, it was easy to expand the scope of the existing institution to cover medical devices.

Expansion came during the 1930s. W. G. Campbell, the chief of the FDA, and Rexford G. Tugwell, the newly appointed assistant secretary of agriculture, decided to rewrite the legislation in the spring of 1933.[52]

Temin, Taking Your Medicine, 38.

A 1933 report by the FDA first raised the problems related to medical devices:

Mechanical devices, represented as helpful in the cure of disease, may be harmful. Many of them serve a useful and definite purpose. The weak and ailing furnish a fertile field, however, for mechanical devices represented as potent in the treatment of many conditions for which there is no effective mechanical cure. The need for legal control of devices of this type is self-evident. Products and devices intended to effect changes in the physical structure of the body not necessarily associated with disease are extremely prevalent and, in some instances, capable of extreme harm. They are at this time almost wholly beyond the control of any Federal statute…. The new statute, if enacted, will bring such products under the jurisdiction of the law.[53]

U.S. Department of Agriculture, Report of the Chief of the Food and Drug Administration (Washington, D.C.: GPO, 1933), 13-14.

An interesting evolution in the FDA's orientation from its earlier conception had begun. The FDA eventually moved from the aegis of agriculture, where food was a primary focus, to the Department of Health, Education, and Welfare (HEW), which was concerned with broader issues of health. This shift made device regulation a logical extension of FDA jurisdiction.

Problems of definition arose, however, in the debates over terminology. In early drafts of the bill to replace the 1906 law, drugs were defined more broadly. There was an effort to capture within the regulatory definition both products used for the diagnosis of disease and products that were clearly fraudulent, such as antifat and reducing potions that did not purport to treat recognized diseases. Some legislators proposed to include therapeutic devices within the definition of drugs. In Senate hearings on the bill, the FDA chief stated that adding medical devices to the definition of drugs was intended to extend the scope of the law to include not only products like sutures and surgical dressings but also "trusses or any other mechanical appliance that might be employed for the treatment of disease or intended for the cure or prevention of disease."[54]

Davidson, "Preventive 'Medicine,'" 414.

The legislative debates on the definition are enlightening, not for the quality of the debates per se but for their focus. Indeed, the discussion of the device/drug distinction emerged in a confused context. While Senator Copeland, a major supporter of the bill, spoke to an amendment of the drug definition to include drugs used for "diagnosis" of disease as well as for "cure, mitigation, or treatment," his colleague, Senator Clark, objected to the use of the term drug to describe a device. Senator Clark stated that he did not oppose devices being covered by the law, but to treat them as drugs "in law and in logic and in lexicography is a palpable absurdity."[55]

Ibid., 415.

Because Clark was raising an issue not relevant to the amendment under debate, the matter was not resolved at that time. Senator Copeland, however, had no fundamental objection to separate definitions, and later versions of the bill included a separate paragraph defining devices.

Subsequent events made this distinction very significant indeed. The first bill was introduced in Congress in 1933 but did not become law for five years. It finally got attention following a drug disaster. The Massengill Company, a drug firm, wanted to sell a liquid form of sulfanilamide, one of the new sulfa drugs, which was then on the market in tablet form. The company dissolved the drug in a chemical solvent and marketed it as Elixir Sulfanilamide in September 1937. The solvent, diethylene glycol, was toxic; over one hundred people died from the elixir. The FDA exercised its power to seize as much of the preparation as it could find and managed to retrieve most of it. However, the agency did not have the power to prosecute Massengill for causing the deaths; it could only cite the company for failing to label the solution properly. The fine imposed for mislabeling was $26,100.[56]

Temin, Taking Your Medicine, 42.

Public pressure for greater FDA authority arose after this incident. The pending legislation expanded the FDA's power to screen all "new drugs" before they could be marketed. This distinction created a new class of drugs quite separate from medical devices. The concept of premarket control provided more protection for the consumer than prior labeling and information requirements.

However, this extension of power to regulate "new drugs" had an additional effect on medical devices. They were now included in the final version of the law, but because they were defined separately, the new drug provision did not apply to them. Device producers were subject only to the adulteration and labeling requirements under the law. The emphasis of the lawmakers continued to be on fraudulent devices, not on control of legitimate devices that might have both therapeutic benefit and harmful characteristics.

The FDA's procedural powers over devices were limited to seizure of the misbranded product and prosecution of the producer. It could not initiate regulatory action until a device had entered interstate commerce and then only if it deemed the product improperly labeled ("misbranded") or dangerous ("adulterated").[57]

21 U.S.C. sec. 351-352.

Once the FDA considered a product misbranded or adulterated, it could initiate a seizure action and seek to enjoin the manufacturer from further production of the device.

Seizure was the tactic most frequently employed against device producers in subsequent years. The agency had to file a libel action in a district court, alleging a device to be in violation of the law. The FDA seized the devices before trial. The seizure was upheld only if the FDA could prove its charges at trial. The proceeding affected the specific device seized. Only after the agency succeeded in the initial action could it make multiple seizures or move the court to enjoin further production. Of course, multiple seizures were impractical because it was extremely difficult to trace the ultimate location of the condemned devices and virtually impossible to seize them all. Device manufacturers could evade injunction by making insignificant changes in their products and marketing them as "new devices."[58]

See Comptroller General of the United States, Lack of Authority Limits Consumer Protection: Problems in Identifying and Removing from the Market Products Which Violate the Law, B-164031(2) at 18-25 (1972).

The number of trials for fraudulent devices remained small, and they affected only quack producers at the margins of the industry.

When the federal government addressed problems with medical devices, its powers were limited to procedures that had proved inadequate for the regulation of harmful drugs. Despite these problems, however, the 1938 law did set an important precedent. A large, popular federal institution existed to protect the public from harmful products used for health purposes. Devices were generally ignored for nearly forty years, while federal power over the drug marketplace expanded from 1938 to 1962. Not until 1976 did the FDA get jurisdiction to regulate some devices as stringently as new drugs.

Government and Device Distribution

As we have seen, government institutions, primarily the NIH and the FDA, were in place to intervene in the discovery phase of the medical device innovation process. However, the entry of government into the distribution phase lagged behind these developments.

At the beginning of the twentieth century, not much health care was available for the consumer to buy. As we have seen, doctors had a very limited arsenal of treatment options. There were few drugs and fewer medical devices. There was only a primitive understanding of the biological processes of the human body and little that a doctor could do to alleviate illness. Hospitals were used only by the "deserving" poor, who could not be cared for at home but were not candidates for the almshouse. The dismal conditions in hospitals between 1870 and 1920 have been exhaustively documented.[59]

See Rosenberg, Caring for Strangers.

The public sector played only a minor role in support of hospital care. Local governments often supported public hospitals, and some private institutions received local aid, but there were no other sources of public funds for hospitals. Although suffering financial woes, a New York City hospital in 1904 would not seek state or federal support: "No one, in those days, proposed going hat in hand to Albany or Washington."[60]

Starr, Transformation, 148-180.

There were no intermediaries to share the costs of sickness for anyone who was not destitute. In the first decades of the twentieth century, Europe began to provide state aid for sickness insurance, but there was no government action to subsidize voluntary expenditures in the United States.[61]

Ibid., 237. Starr attributes European activity to political instability, which the United States, with its highly decentralized government, did not experience.

Some progressive reformers advocated health insurance, both private and public, as early as 1912. However, employers strongly opposed insurance schemes, as did physicians and labor unions. The medical profession vigorously decried a health insurance referendum in California, alleging that the proposal was linked to sinister forces in Germany.[62]

Ibid., 245-253.

However, important developments in diagnosis and treatment began to change the public's attitude toward the desirability of health care. The advent of aseptic surgery and the X-ray machine gave the ill some hope that surgery could cure them. The number of hospitals grew from 4,000 in 1909 to 7,000 in 1928, and the number of hospital beds expanded from 400,000 to 900,000. Costs of care began to rise as well; these costs were associated with new capital equipment, including European innovations in diagnosis, and with the growth of laboratories.

Unfortunately, the Depression of the 1930s brought fewer visits to doctors and hospitals and a consequent drop in physicians' incomes. Hospitals suffered financially, while demand for free services increased. Some public welfare payments for medical care, seen at first as a temporary solution, continued after the Depression.

Important changes were occurring in the market for medical care, but they did not extend to acceptance of federal and state involvement in that market. People had begun to see the value of health care. The middle class was no longer satisfied with attention from the family physician, and the hospital offered important services not available at home. Yet at the very time medical science held out hope, the Depression made health care inaccessible to large numbers of Americans. There was a perceived need for more health care, but few resources were available to pay for it. Pressure for greater access, particularly from the middle class, would ultimately bring the government into the marketplace. However, the idea of government subsidies met with considerable resistance, and health care took a back seat to other pressing social needs, such as public assistance and welfare.

As America emerged from the Great Depression and approached World War II, significant changes had occurred in the relationship between government and innovation. The NIH had been established to promote basic medical research, which indirectly affected the medical device industry. The federal government had also taken significant steps toward direct regulation of product producers in the 1938 Food, Drug, and Cosmetic Act. The government had not yet committed itself to payment for health care. This development lay ahead.

World War II Accelerates Interaction

As the nation mobilized for war in the 1940s, the federal government became involved in many activities previously left to the private sector. Government leadership in the war effort changed the public perception about its basic role in science and medicine.

Wartime Innovation

The federal government had an effect on all stages of the innovation process in medical devices during the war. Government spending promoted basic science as well as technological invention and development. Government also became a major consumer of both medical technology and military technology, greatly expanding the market for products produced by firms with medical technology expertise.

President Roosevelt established the Office of Scientific Research and Development (OSRD) in 1941 with two parallel committees, one on national defense and one on medical research. The Committee on Medical Research (CMR) mounted a comprehensive program to address medical problems associated with the war. The government gave 450 contracts to universities and 150 more to research institutes, hospitals, and other organizations. In total, the committee spent $15 million and involved some 5,500 scientists and technicians. Government-supported achievements included synthetic atabrine for malaria treatment (which replaced the quinine supplies seized by Japan), therapeutically useful derivatives of blood, and the development of penicillin.[63]

Ibid., 340.

The OSRD was unique because it was organized as an independent civilian enterprise and managed by academic and industrial scientists in equal partnership with the military. In World War I, scientists had served as military officers under military commanders; the work of the OSRD, by contrast, was fully funded by the government, but scientists worked in their own institutional settings. The research contract model proved to be a flexible instrument in the subsequent partnership between government and private institutions during the postwar period.[64]

Harvey Brooks, "National Science Policy and Technological Innovation," in Ralph Landau and Nathan Rosenberg, eds., The Positive Sum Strategy: Harnessing Technology for Economic Growth (Washington, D.C.: National Academy Press, 1986), 119-167, 123.

Government also let contracts for development of wartime technologies. Some of these efforts benefited device companies directly because they had technologies that could be channeled for military use. Other government efforts promoted technologies that would later prove useful in medical device technology. In addition, the government was a ready market for military and medical supplies. Government purchasing enriched many companies in the instrument business, such as Beckman and GE. Government policy helped to establish a technology base for postwar development and allowed firms to take advantage of the postwar boom.

In addition, federal government spending and greater need for health care for service personnel injured in combat stimulated the medical technology market. The federal government provided medical services for all military personnel—60 percent of all hospital beds were used by the military. Thus government also became a large consumer of medical supplies and equipment.

Medical Device Successes in Wartime

The war provided an impetus to innovation in medical device technology. Three profiles of successful firms—Beckman Instruments, Baxter Travenol, and General Electric—illustrate the effect of government on innovation.

Beckman

Beckman Instruments provides an excellent example of the impact of the war on medical device technology. The National Technical Laboratories, as the firm was called at the time, did not make weapons but did make important military products. Its contribution to the war effort is reflected in sales data: gross sales were thirty-four times larger in 1950 than in 1940.

One key product was Beckman's "Helipot," a unique instrument for use in radar systems. The U.S. military requested units built to military specifications for the radar program and able to withstand strong mechanical shocks. Beckman recalled, "I began to get calls from lieutenants and captains and finally from generals and admirals. There were ships that couldn't sail because they didn't have Helipots for their radars."[65]

Stephens, Golden Past, 34.

Beckman himself redesigned the instrument. In the first year of production, the new model accounted for 40 percent of the firm's total profits.

Because the war disrupted supplies of essential products, new markets opened, and creativity was welcomed and rewarded. Beckman's spectrophotometer, which used a quartz prism and a newly developed light source and phototube, is a good example. This model, introduced in 1941, could accurately measure the vitamin content of a substance. The war had cut off the supply from Scandinavia of cod liver oil, a rich source of vitamins A and D. Before the Beckman instrument, there was no efficient way to measure the vitamin content of other foods in order to plan healthy diets. The Beckman spectrophotometer determined vitamin content precisely in one or two minutes.

Rubber supplies had been cut off after the bombing of Pearl Harbor, and the nation desperately needed a substitute. Supported by the federal Office of Rubber Reserve, Beckman developed infrared spectrophotometers that could detect butadiene, a major ingredient in synthetic rubber. Later on, Beckman was also involved in a government project with the Massachusetts Institute of Technology, working under the Atomic Energy Commission, to develop a recording instrument to monitor radioactivity levels in atomic energy plants.

These new technologies frequently proved to have medical applications. With friends from the California Institute of Technology, Beckman produced oxygen meters for the navy. An anesthesiologist heard about the meter in its development stage and was interested in using it to measure oxygen in infant incubators. If oxygen supplies to a baby are too low, the infant will not thrive; if oxygen levels are too high, the baby can become blind. This doctor treated his own grandchild with a Beckman meter, feeding oxygen from a tank into a cardboard box and thereby saving the baby's life. However, hospitals could not afford oxygen meters during the war, and they did not begin to purchase them in large numbers until twelve years later.

Baxter Travenol

Baxter Travenol provides another wartime success story. Although the medical theory underlying intravenous (IV) therapy was clearly understood at the outset of the twentieth century, only large research and teaching hospitals could prepare solutions and equipment properly. Even carefully prepared solutions caused adverse reactions, such as severe chills and fevers, because pyrogens produced by bacteria remained in the solutions after sterilization.

In 1931, Idaho surgeon Ralph Falk, his brother, and Dr. Donald Baxter believed they could eliminate the pyrogen problem through controlled production in evacuated containers. When reactions continued to occur in patients, the doctors discovered that pyrogens were present in the rubber infusion equipment used by hospitals. They used disposable plastic tubing to eliminate this source of bacteria and worked with a glass manufacturer to produce a coating that resisted the contamination caused by the deterioration of the bottles that held the solutions.

In 1939 the fledgling company pioneered another medical breakthrough: a container for blood collection and storage. It was the first sterile, pyrogen-free, vacuum-type blood unit for indirect transfusion. It allowed storage of blood products for up to twenty-one days, making blood banking practical for the first time. The enormous demand for IV equipment and blood transfusions during World War II was a boon for Baxter. Its solutions were the only ones approved for wartime use by the U.S. military. Sales dropped dramatically after the war and rose again several years later during the Korean War. These fluctuating fortunes then stabilized, and the company enjoyed twenty-five years of uninterrupted growth from 1955 onward.[66]

This information comes from Baxter Travenol Laboratories Public Relations Department. The publication is entitled "The History of Baxter Travenol" and is unpaginated.

General Electric

General Electric was involved in every facet of the war, including building engines for planes, tanks, ships, and submarines. It provided electrical capacity for large-scale manufacturing and also built power plants, testing equipment, and radio equipment.[67]

John Anderson Miller, Men and Volts at War: The Story of General Electric in World War II (New York: McGraw-Hill, 1947).

GE's activities extended to medical care for combat forces. Innovation was integral to that effort, as well as to the engines of war. "All along the story was the same. The war production job was one of prodigious quantities of all kinds of equipment. But it was also a job of constantly seeking ways to improve that equipment. Only the best was good enough, and the best today might be second best next week."[68]

Ibid., 11.

General Electric's war-inspired medical equipment innovations included portable X-ray machines for use on ships and at relatively inaccessible stations such as Pacific island hospitals. X-ray machines were also used to screen inductees for tuberculosis, and GE created cost-saving features for this work, including machines that used smaller films. In addition, it built refrigeration and air-conditioning systems for blood and penicillin storage. Government purchasing expanded market size. The army bought hundreds of electrocardiograph machines, ultraviolet lamps, and diagnostic and therapeutic devices.[69]

Ibid., 189.

The war affected innovation in dramatic ways. At the discovery stage, medical device innovation was stimulated and encouraged. Many technological innovations in materials science, radar, ultrasound, and other advancements had significant medical implications in the postwar period. Government purchasing stimulated the distribution of devices as well. The number of device producers and the value of their shipments grew in every SIC code.

Just as important, but less visible, were the institutional changes that occurred. Before the war, major public institutions had been formed that presaged government intervention in the discovery phase, most notably the NIH and the FDA. Wartime demands also accelerated the general public's acceptance of government involvement in scientific research and new technology. All these forces led to significant government activity in all phases of medical device innovation. The patient soon received extensive treatment.